- Categories:

- art, augmented reality, memory, technology

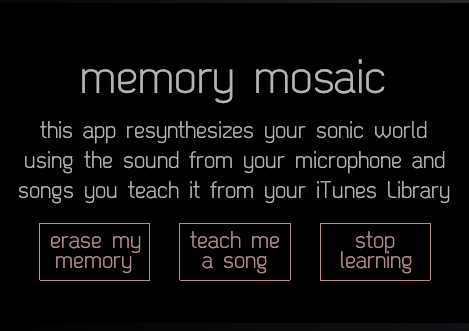

A product of my PhD research is now available on the iPhone App Store (for a small cost!): View in App Store.

This application is motivated by my interest in experiencing an augmented perception, and of course it is very much inspired by some of the work here at Goldsmiths. Applying existing approaches in soundspotting/mosaicing to a real-time stream situated in the real world lets you play with your own sonic memories, and it certainly requires an open ear for new experiences. Succinctly, the app records segments of sound in real time using its own listening model as you walk around in different environments (or sit at your desk). The longer the app is left running, the more of these segments accumulate into a database (a working memory model) against which new sounds are understood. Incoming sounds are matched to this database, and the closest matching sound is played instead. What you get is a polyphony of sound memories triggered by the incoming feed of audio, and an app that sounds more like your environment the longer it is left to run. A somewhat gimmicky feature of this app is the ability to learn a song from your iTunes Library. This lets you experience your sonic world as your favorite hip-hop song, or whatever you listen to.
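For the curious, the matching loop described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not the app's actual code: the names `SoundMemory`, `remember`, `closest`, and `listen` are hypothetical, each segment is assumed to be already summarized as a short list of numbers (a feature vector), and "closest" is assumed to mean smallest Euclidean distance. The real listening model and segmentation are not shown here.

```python
import math


class SoundMemory:
    """Growing database of recorded segments, each described by a feature vector."""

    def __init__(self):
        self.features = []  # one feature vector (list of floats) per segment
        self.segments = []  # the stored audio for each segment (any object)

    def remember(self, feature, segment):
        """Add a newly recorded segment to the database."""
        self.features.append(list(feature))
        self.segments.append(segment)

    def closest(self, feature):
        """Return the stored segment nearest to `feature`, or None if empty."""
        if not self.features:
            return None
        # Assumption: similarity is plain Euclidean distance between features.
        best = min(
            range(len(self.features)),
            key=lambda i: math.dist(self.features[i], feature),
        )
        return self.segments[best]


def listen(memory, feature, segment):
    """Handle one incoming segment: choose a memory to play, then store it."""
    playback = memory.closest(feature)  # what gets played instead of the input
    memory.remember(feature, segment)   # assumed: every segment is remembered
    return playback
```

Calling `listen` repeatedly with new segments returns nothing at first, then increasingly close matches as the memory fills up, which is the "sounds more like your environment the longer it runs" effect in miniature.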

Hope you have a chance to try it out, and please forward it to anyone who might be interested.