Introduction

This is the homepage for the V&A course on Developing Audiovisual Apps for the iPhone/iPad using openFrameworks. This site will hold code samples and additional resources for development.

Please make sure you already have a coding environment set up for openFrameworks v007: openFrameworks. You will want the OSX version to begin with; when we start developing on the iPhone/iPad, we will use the iOS download.

C++ Resources & Books

C++ Tutorial

Another C++ Tutorial

Bjarne Stroustrup’s The C++ Programming Language

Deitel & Deitel’s C++ How to Program

openFrameworks Documentation

openFrameworks Forum

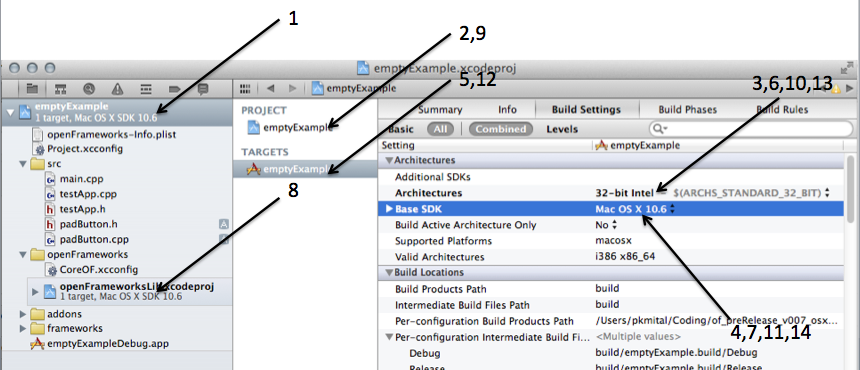

If you are having trouble compiling the OSX-based openFrameworks examples (not the iOS examples), make sure your project settings use the 10.6 SDK and a 32-bit build architecture. The following image shows how to change the project and target settings for both the openFrameworks and emptyExample projects.

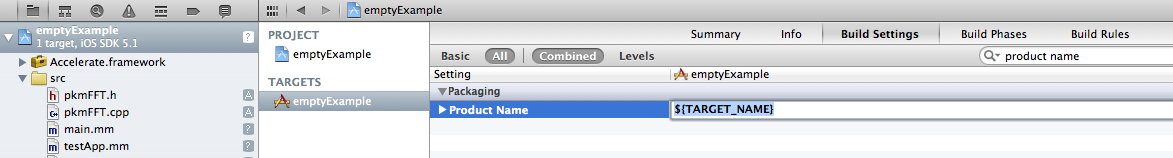

To change your application’s name, navigate to your Target’s Build Settings. The picture below should help you find the “Product Name” setting: click on your XCode project, then your Target, then the Build Settings tab. Search for “Product Name” and change the value there.

Also, when you need screenshots of your app to publish on the App Store, you can take them on your iPhone/iPad. Hold the Home and Lock buttons together for about 1.5 seconds and you will see the screen flash white; the screenshot will then be in the Photo Library on your device.

Week 1: iPhone, App Store, XCode, openFrameworks

Introduction

This week, we were introduced to some of the capabilities of the iPhone and iPad hardware and saw some successful apps in the App Store. We then had a gentle introduction to XCode and openFrameworks, creating our first program, which displayed camera and video images. We learned to create a new project by copying the emptyExample folder into our own folder while keeping the same directory hierarchy. We also saw that the “data” folder inside our “bin” folder is a convenient place to store movies, sounds, images, and other files our application needs.

Lecture Slides

Week 2: Classes, Vectors, and Buttons

Introduction

Week 2 involved a lot more coding as we began developing an interactive application that draws multiple buttons. We will eventually use these buttons to control sound, recording and playing back sound samples at the press of the touchscreen. We learned how to define our own classes by creating a “.h” and a “.cpp” file. By the end of the class we had only just been introduced to vectors; we will have more time to expand on these concepts in the next class, in two weeks’ time.

Be sure to place these images in your “data” folder: button-images.zip. Here is also a zip file of the code reproduced below: week2.zip. Your homework is to study “for loops”, “arrays”, and “vectors” in order to reduce the amount of code in the testApp.cpp file. If you are feeling more ambitious, try to make each button do something, such as play a sound (hint: ofSoundPlayer; also be sure to check the openFrameworks examples on audio input and output!). We’ll look at how to do these things together in two weeks’ time, as well as play with audio input and use a lot more for loops.
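The homework asks for loops and vectors to shrink testApp.cpp. Here is a minimal sketch of the idea in plain C++, so it compiles without openFrameworks. `Button` and `makeButtonRow` are hypothetical stand-ins, not part of the course code, and `Button` keeps only the position and size fields of padButton. One loop replaces the four near-identical blocks in setup().

```cpp
#include <vector>

// Hypothetical stand-in for padButton: only position and size,
// so this sketch compiles without openFrameworks.
struct Button {
    int x = 0, y = 0, width = 0, height = 0;
    void setPosition(int px, int py) { x = px; y = py; }
    void setSize(int w, int h) { width = w; height = h; }
};

// Lay out `count` square buttons of `size` pixels in a single row,
// replacing the repeated buttons[0] .. buttons[3] calls with one loop.
std::vector<Button> makeButtonRow(int count, int size) {
    std::vector<Button> buttons(count);
    for (int i = 0; i < count; i++) {
        buttons[i].setPosition(i * size, 0);   // each button sits one width further right
        buttons[i].setSize(size, size);
    }
    return buttons;
}
```

In the real app, the body of the loop would also call `loadImages("button.png", "button-down.png")`, and the same kind of loop can replace the repeated calls in draw(), mousePressed() and mouseReleased().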

Button Pads

testApp.h

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#pragma once

#include "ofMain.h"

#include "padButton.h"

// these are macros, which we treat as constant variables

#define WIDTH 480

#define HEIGHT 320

class testApp : public ofBaseApp{

public:

// initialization

void setup();

// main loop of update/draw/update/draw/update/draw...

void update();

void draw();

// mouse/keyboard callbacks

void keyPressed (int key);

void keyReleased(int key);

void mouseMoved(int x, int y );

void mouseDragged(int x, int y, int button);

void mousePressed(int x, int y, int button);

void mouseReleased(int x, int y, int button);

// window callbacks

void windowResized(int w, int h);

void dragEvent(ofDragInfo dragInfo);

void gotMessage(ofMessage msg);

// for scaling our app for iphone/ipad

float scaleX, scaleY;

// single instance of our padButton class

// padButton button1;

// a vector is the C++ standard library's resizable array

// this allows us to create multiple buttons

vector<padButton> buttons;

};

testApp.cpp

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#include "testApp.h"

//--------------------------------------------------------------

void testApp::setup(){

ofSetWindowShape(WIDTH, HEIGHT);

scaleX = WIDTH / 1024.0;

scaleY = HEIGHT / 768.0;

// we allow our vector to have 4 padButtons, which we index from 0 - 3

buttons.resize(4);

// for the first index, we set up our button by calling the following methods

buttons[0].setPosition(0, 0);

buttons[0].setSize(200, 200);

buttons[0].loadImages("button.png", "button-down.png");

// and so on...

buttons[1].setPosition(200, 0);

buttons[1].setSize(200, 200);

buttons[1].loadImages("button.png", "button-down.png");

buttons[2].setPosition(400, 0);

buttons[2].setSize(200, 200);

buttons[2].loadImages("button.png", "button-down.png");

buttons[3].setPosition(600, 0);

buttons[3].setSize(200, 200);

buttons[3].loadImages("button.png", "button-down.png");

/*

button1.setPosition(0, 0);

button1.setSize(200, 200);

button1.loadImages("button.png", "button-down.png");

*/

//buttons.push_back(button1);

/*

button_image_up.loadImage("button.png");

button_image_down.loadImage("button-down.png");

button_x = 0;

button_y = 0;

button_width = 200;

button_height = 200;

button_state = NORMAL;

*/

}

//--------------------------------------------------------------

void testApp::update(){

}

//--------------------------------------------------------------

void testApp::draw(){

// for scaling our whole canvas

ofScale(scaleX, scaleY);

// let's draw all of our buttons

buttons[0].draw();

buttons[1].draw();

buttons[2].draw();

buttons[3].draw();

//button1.draw();

/*

// allow alpha transparency

ofEnableAlphaBlending();

if(button_state == NORMAL)

{

button_image_up.draw(button_x,

button_y,

button_width,

button_height);

}

else

{

button_image_down.draw(button_x,

button_y);

}

// ok done w/ alpha blending

ofDisableAlphaBlending();

*/

}

//--------------------------------------------------------------

void testApp::keyPressed(int key){

}

//--------------------------------------------------------------

void testApp::keyReleased(int key){

}

//--------------------------------------------------------------

void testApp::mouseMoved(int x, int y ){

}

//--------------------------------------------------------------

void testApp::mouseDragged(int x, int y, int button){

}

//--------------------------------------------------------------

void testApp::mousePressed(int x, int y, int button){

/*

if( x > button_x && y > button_y

&& x < (button_x + button_width)

&& y < (button_y + button_height) )

{

button_state = BUTTON_DOWN;

}

*/

// and interaction callbacks, which we must scale for different devices

buttons[0].pressed(x / scaleX, y / scaleY);

buttons[1].pressed(x / scaleX, y / scaleY);

buttons[2].pressed(x / scaleX, y / scaleY);

buttons[3].pressed(x / scaleX, y / scaleY);

//button1.pressed(x / scaleX, y / scaleY);

}

//--------------------------------------------------------------

void testApp::mouseReleased(int x, int y, int button){

/*

button_state = NORMAL;

*/

// and interaction callbacks, which we must scale for different devices

buttons[0].released(x / scaleX, y / scaleY);

buttons[1].released(x / scaleX, y / scaleY);

buttons[2].released(x / scaleX, y / scaleY);

buttons[3].released(x / scaleX, y / scaleY);

//button1.released(x / scaleX, y / scaleY);

}

//--------------------------------------------------------------

void testApp::windowResized(int w, int h){

}

//--------------------------------------------------------------

void testApp::gotMessage(ofMessage msg){

}

//--------------------------------------------------------------

void testApp::dragEvent(ofDragInfo dragInfo){

}

padButton.h

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

// include file only once

#pragma once

// include openframeworks

#include "ofMain.h"

// declare the padbutton class and methods

class padButton {

public:

// enumerations (enums) allow us to give more meaningful names to integer values

// we could use an integer to the same effect,

// e.g. "int button_state = 0", when our button is down

// and "int button_state = 1", when our button is normal,

// but enumerators allow us to instead say

// "BUTTON_STATE button_state = BUTTON_DOWN", when our button is down,

// "BUTTON_STATE button_state = NORMAL", when our button is normal.

enum BUTTON_STATE {

BUTTON_DOWN,

NORMAL

};

// default constructor - no parameters, no return type

padButton();

// methods which our button class will define

// one for loading images for each of the button states

void loadImages(string state_normal, string state_down);

// setters, to set internal variables

// the position

void setPosition(int x, int y);

// the size

void setSize(int w, int h);

// drawing the buttons

void draw();

// and interaction with the button

void pressed(int x, int y);

void released(int x, int y);

private:

// images for drawing

ofImage button_image_normal, button_image_down;

// our position

int button_x, button_y;

// size

int button_width, button_height;

// and internal state of the button

BUTTON_STATE button_state;

};

padButton.cpp

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#include <iostream>

#include "padButton.h"

// default constructor definition

padButton::padButton()

{

// by default, we have a very boring button

button_x = 0;

button_y = 0;

button_width = 0;

button_height = 0;

button_state = NORMAL;

}

void padButton::loadImages(string state_normal, string state_down)

{

// load the images for our buttons

button_image_normal.loadImage(state_normal);

button_image_down.loadImage(state_down);

}

void padButton::setPosition(int x, int y)

{

// set our internal variables

button_x = x;

button_y = y;

}

void padButton::setSize(int w, int h)

{

// set our internal variables

button_width = w;

button_height = h;

}

void padButton::draw()

{

// allow alpha transparency

ofEnableAlphaBlending();

// if our button is normal

if(button_state == NORMAL)

{

// draw the normal button image

button_image_normal.draw(button_x,

button_y,

button_width,

button_height);

}

else

{

// draw the down image

button_image_down.draw(button_x,

button_y,

button_width,

button_height);

}

// ok done w/ alpha blending

ofDisableAlphaBlending();

}

void padButton::pressed(int x, int y)

{

// a compound boolean expression to determine whether

// our x,y input is within the bounds of the button:

// we have to check the left, the top, the right, and the bottom sides

// of the button, respectively.

if( x > button_x && y > button_y

&& x < (button_x + button_width)

&& y < (button_y + button_height) )

{

button_state = BUTTON_DOWN;

}

}

void padButton::released(int x, int y)

{

// ok back to normal

button_state = NORMAL;

}
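The bounds check in `padButton::pressed()` is easier to reason about, and to test, as a free function. A sketch, assuming a hypothetical helper named `insideButton` that is not part of the course code; it keeps the original's strict `>` and `<`, so a touch exactly on an edge does not count as a hit.

```cpp
// Hypothetical helper mirroring the test in padButton::pressed():
// true if (x, y) lies strictly inside the rectangle whose top-left
// corner is (bx, by) and whose size is bw x bh.
bool insideButton(int x, int y, int bx, int by, int bw, int bh) {
    return x > bx && y > by &&   // right of the left edge, below the top edge
           x < bx + bw &&        // left of the right edge
           y < by + bh;          // above the bottom edge
}
```

Because openFrameworks puts the origin at the top-left corner with y growing downwards, “above the bottom edge” means a smaller y value.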

Week 3: For-loops, iOS, & Multitouch

Introduction

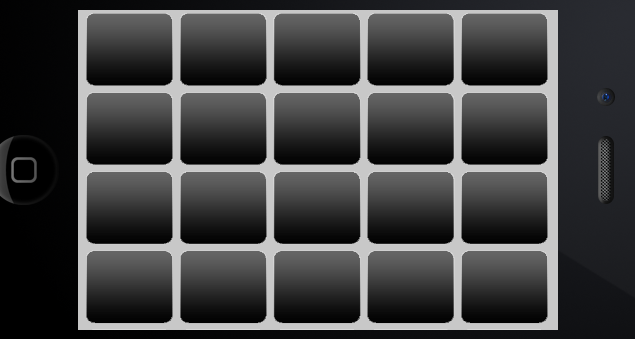

This week we explored using for-loops to shorten our button code, and tried drawing a single row of buttons made up of many columns. We then explored nested for-loops and drew many rows of buttons, each still made up of many columns. The result was a square matrix of buttons. Our next step was to explore multi-touch functionality, which meant finally moving our code to compile for an iOS device. To those students for whom I was unable to get an iOS account: the V&A is currently working on getting an account for us. In the meantime, everyone can still run their code in the iPhone or iPad Simulator, which simulates the device on your Mac!
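The nested loop in the Week 3 code stores a 2D grid of buttons in a 1D vector, using `j * numButtons + i` to turn a (column i, row j) pair into a single index; this layout is called row-major order. A plain C++ sketch of that mapping and its inverse; `Cell`, `makeGrid` and `indexToColumnRow` are hypothetical names used only for illustration.

```cpp
#include <vector>

// Hypothetical stand-in for a button's position in the grid.
struct Cell {
    int x, y;
};

// Fill numButtons * numButtons cells in row-major order, the same
// layout as buttons[j * numButtons + i] in the Week 3 code.
std::vector<Cell> makeGrid(int numButtons, int size) {
    std::vector<Cell> cells(numButtons * numButtons);
    for (int j = 0; j < numButtons; j++) {        // outer loop: rows
        for (int i = 0; i < numButtons; i++) {    // inner loop: columns
            cells[j * numButtons + i] = Cell{size * i, size * j};
        }
    }
    return cells;
}

// Recover (column, row) from a flat index: the inverse of j * n + i.
void indexToColumnRow(int index, int numButtons, int &column, int &row) {
    column = index % numButtons;
    row = index / numButtons;
}
```

With numButtons = 5 and 200-pixel buttons on the 1024 x 768 canvas, the fourth row (y = 600) is cut off at the bottom and the fifth row (y = 800) lies entirely off-screen, so smaller buttons or fewer rows would fit better.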

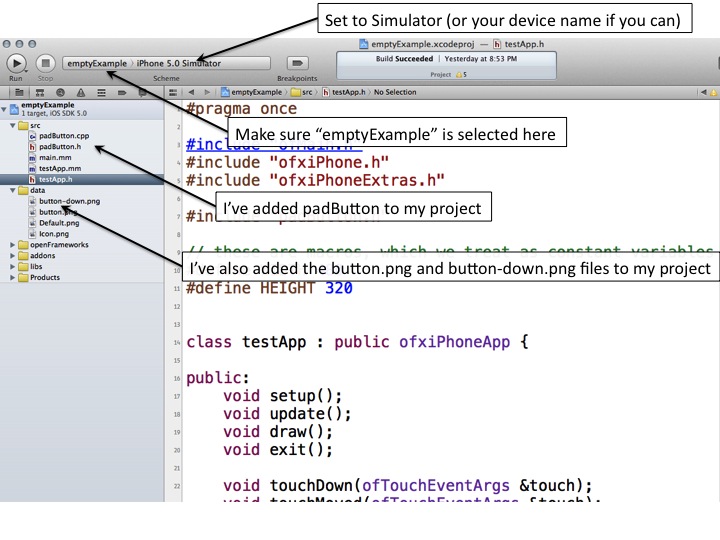

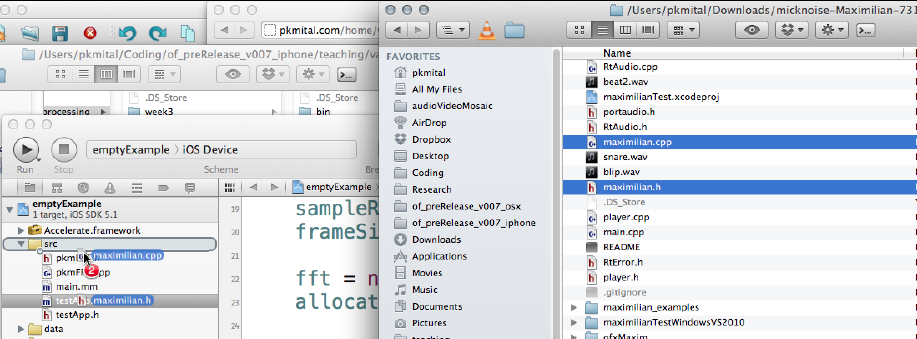

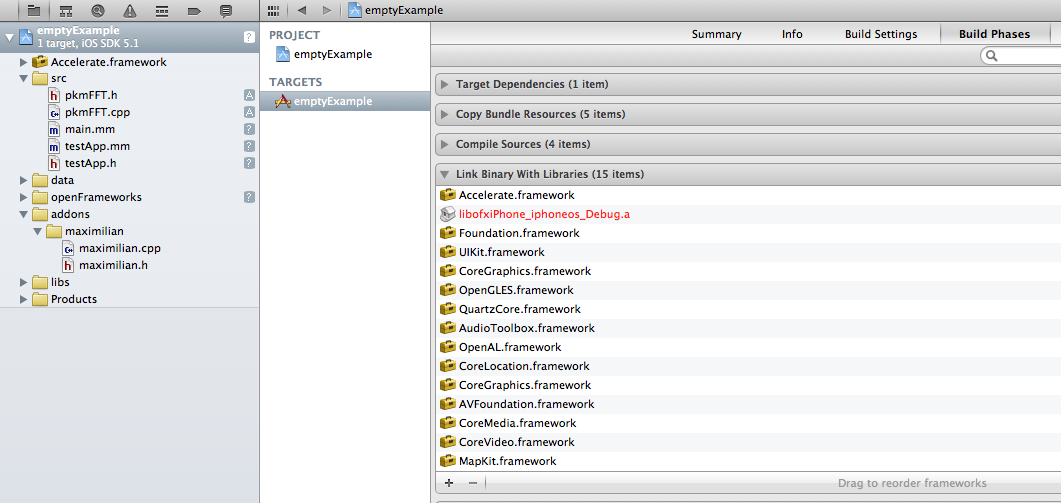

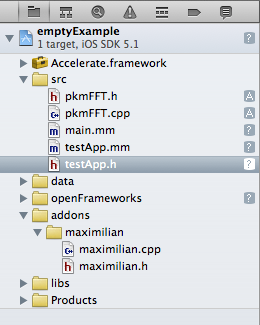

When developing for iOS devices with openFrameworks, make sure you have downloaded the iOS version of openFrameworks here: openFrameworks download page. Up until now, we have been working with the OSX version of openFrameworks; the iOS version lets us write very similar code but compile it for an iOS device. Try running the “emptyExample” inside the iOS openFrameworks download, in the folder “apps/iPhoneExamples/emptyExample”. Now try copying this folder to a new one, keeping the directory hierarchy as we’ve done in previous applications, and rewrite our padButton code to run on the iPhone Simulator. You’ll need to add the padButton.h and padButton.cpp files to your “src” directory and also drag them into XCode so that your project knows about them. You also need to add the “button.png” and “button-down.png” files to your “data” folder and drag these into your XCode project as well; this is important, since your device will need these files. See the figure below and notice the files I have included in my project. I’ve also copied the code below.

For your homework, please make sure you have this running, at least in the Simulator. Also, try exploring other configurations of buttons and the multi-touch functionality.

Button Pads iOS

testApp.h

#pragma once

#include "ofMain.h"

#include "ofxiPhone.h"

#include "ofxiPhoneExtras.h"

#include "padButton.h"

// these are macros, which we treat as constant variables

#define WIDTH 480

#define HEIGHT 320

class testApp : public ofxiPhoneApp {

public:

void setup();

void update();

void draw();

void exit();

void touchDown(ofTouchEventArgs &touch);

void touchMoved(ofTouchEventArgs &touch);

void touchUp(ofTouchEventArgs &touch);

void touchDoubleTap(ofTouchEventArgs &touch);

void touchCancelled(ofTouchEventArgs &touch);

void lostFocus();

void gotFocus();

void gotMemoryWarning();

void deviceOrientationChanged(int newOrientation);

// for scaling our app for iphone/ipad

float scaleX, scaleY;

// single instance of our padButton class

// padButton button1;

// a vector is the C++ standard library's resizable array

// this allows us to create multiple buttons

vector<padButton> buttons;

int numButtons;

};

testApp.mm

#include "testApp.h"

//--------------------------------------------------------------

void testApp::setup(){

// register touch events

ofRegisterTouchEvents(this);

// initialize the accelerometer

ofxAccelerometer.setup();

//iPhoneAlerts will be sent to this.

ofxiPhoneAlerts.addListener(this);

//If you want a landscape orientation

//iPhoneSetOrientation(OFXIPHONE_ORIENTATION_LANDSCAPE_RIGHT);

ofSetWindowShape(WIDTH, HEIGHT);

scaleX = WIDTH / 1024.0;

scaleY = HEIGHT / 768.0;

numButtons = 5;

// we allow our vector to hold numButtons * numButtons padButtons, which we index from 0 to numButtons * numButtons - 1

buttons.resize(numButtons * numButtons);

// use a nested loop to initialize our buttons, setting their positions as a square matrix

// - this outer-loop iterates over ROWS

for (int j = 0; j < numButtons; j++)

{

// - this inner-loop iterates over COLUMNS

for (int i = 0; i < numButtons; i = i + 1)

{

// notice how we use the loop variables, i and j, in setting the x,y positions of each button

buttons[j * numButtons + i].setPosition(200 * i, 200 * j);

buttons[j * numButtons + i].setSize(200, 200);

buttons[j * numButtons + i].loadImages("button.png", "button-down.png");

}

}

}

//--------------------------------------------------------------

void testApp::update(){

}

//--------------------------------------------------------------

void testApp::draw(){

// for scaling our whole canvas

ofScale(scaleX, scaleY);

// let's draw all of our buttons

for (int i = 0; i < numButtons * numButtons; i++) {

buttons[i].draw();

}

}

//--------------------------------------------------------------

void testApp::exit(){

}

//--------------------------------------------------------------

void testApp::touchDown(ofTouchEventArgs &touch){

// and interaction callbacks, which we must scale for different devices

for (int i = 0; i < numButtons * numButtons; i++) {

buttons[i].pressed(touch.x / scaleX, touch.y / scaleY);

}

}

//--------------------------------------------------------------

void testApp::touchMoved(ofTouchEventArgs &touch){

}

//--------------------------------------------------------------

void testApp::touchUp(ofTouchEventArgs &touch){

// and interaction callbacks, which we must scale for different devices

for (int i = 0; i < numButtons * numButtons; i++) {

buttons[i].released(touch.x / scaleX, touch.y / scaleY);

}

}

//--------------------------------------------------------------

void testApp::touchDoubleTap(ofTouchEventArgs &touch){

}

//--------------------------------------------------------------

void testApp::lostFocus(){

}

//--------------------------------------------------------------

void testApp::gotFocus(){

}

//--------------------------------------------------------------

void testApp::gotMemoryWarning(){

}

//--------------------------------------------------------------

void testApp::deviceOrientationChanged(int newOrientation){

}

//--------------------------------------------------------------

void testApp::touchCancelled(ofTouchEventArgs& args){

}
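The app draws everything in a 1024 x 768 canvas and uses `ofScale(scaleX, scaleY)` to shrink it into the 480 x 320 window, so touch coordinates, which arrive in window pixels, must be divided by the same factors to land in canvas units. Because 480 x 320 is 3:2 while 1024 x 768 is 4:3, the two factors differ, which is why dividing y by scaleX misplaces touches. A sketch; `Point` and `windowToCanvas` are hypothetical names, not openFrameworks API.

```cpp
// Hypothetical point type for the result.
struct Point {
    float x, y;
};

// Hypothetical helper: map a touch in window pixels back into the
// 1024 x 768 design canvas that draw() uses before ofScale() is applied.
Point windowToCanvas(float touchX, float touchY,
                     float windowW, float windowH) {
    const float scaleX = windowW / 1024.0f;   // same ratios as in setup()
    const float scaleY = windowH / 768.0f;
    // x is divided by scaleX and y by scaleY; mixing them up distorts hits
    return Point{touchX / scaleX, touchY / scaleY};
}
```

For example, a touch in the centre of a 480 x 320 window, (240, 160), maps to (512, 384), the centre of the canvas.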

Week 4: Recording, Playing, and Drawing Audio

Introduction

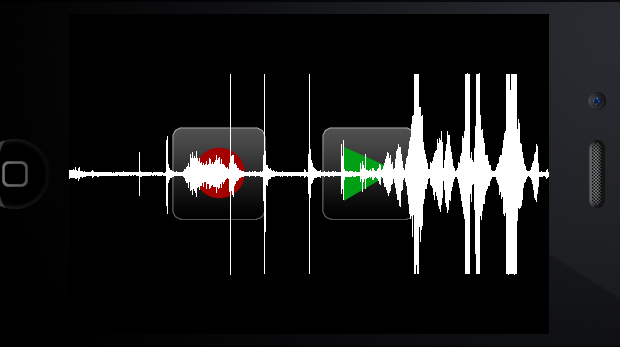

This week we explored how to give our application access to the microphone and speaker. We first drew samples from the microphone, then created a feedback loop in which the microphone samples were sent to the speaker, and finally learned how to record a chunk of audio, using our previous button class for “record” and “play” buttons. The code is copied below and requires the iOS version of openFrameworks. Working from the “emptyExample” of the iOS version (/of_preRelease_v007_iphone/apps/iPhoneExamples/emptyExample), try playing with the code below. Please read through the code and get it compiled on your own machine in your own time. Feel free to send me an e-mail if you have any questions. As a lot was covered, your homework is to explore the code in more depth and understand it as much as possible.
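With a 44100 Hz sample rate and 512-sample buffers, each audio callback carries about 11.6 ms of sound (512 / 44100 s), or roughly 86 callbacks per second. A feedback loop simply hands each microphone block on to the output. A plain C++ sketch of that flow, assuming mono input and interleaved output channels; `FeedbackLoop` is a hypothetical class whose method names echo the `audioIn`/`audioOut` callbacks, with simplified signatures and no dependency on openFrameworks.

```cpp
#include <vector>

// Hypothetical stand-in for the app: audioIn() stores the latest
// microphone block and audioOut() plays it straight back.
class FeedbackLoop {
public:
    explicit FeedbackLoop(int bufferSize) : latest(bufferSize, 0.0f) {}

    // Called with each new block of (mono) microphone samples.
    void audioIn(const float *input, int bufferSize) {
        for (int i = 0; i < bufferSize && i < (int)latest.size(); i++) {
            latest[i] = input[i];
        }
    }

    // Called when the speaker needs a block. Output is assumed to be
    // interleaved, so the same sample is written to every channel.
    void audioOut(float *output, int bufferSize, int nChannels) const {
        for (int i = 0; i < bufferSize; i++) {
            float sample = i < (int)latest.size() ? latest[i] : 0.0f;
            for (int c = 0; c < nChannels; c++) {
                output[i * nChannels + c] = sample;
            }
        }
    }

private:
    std::vector<float> latest;   // most recent microphone block
};
```

On a device, playing the microphone straight back through the speaker can quickly build into a howl, so headphones help while testing.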

Microphone Input

testApp.h

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#pragma once

#include "ofMain.h"

#include "ofxiPhone.h"

#include "ofxiPhoneExtras.h"

class testApp : public ofxiPhoneApp{

public:

void setup();

void update();

void draw();

// touch callbacks

void touchDown(ofTouchEventArgs &touch);

void touchMoved(ofTouchEventArgs &touch);

void touchUp(ofTouchEventArgs &touch);

void touchDoubleTap(ofTouchEventArgs &touch);

void touchCancelled(ofTouchEventArgs &touch);

// new method for getting the samples from the microphone

void audioIn( float * input, int bufferSize, int nChannels );

int width, height;

// variables which will help us deal with audio

int initialBufferSize;

int sampleRate;

float * buffer;

};

testApp.mm

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#include "testApp.h"

//--------------------------------------------------------------

void testApp::setup(){

// register touch events

ofRegisterTouchEvents(this);

// change our phone orientation to landscape

ofxiPhoneSetOrientation(OFXIPHONE_ORIENTATION_LANDSCAPE_RIGHT);

// variables for our audio

initialBufferSize = 512; // how many samples per "frame". each time "audioIn" gets called, we have this many samples

sampleRate = 44100; // how many samples per second. so sampleRate/initialBufferSize is our audio frame rate, or frames per second.

// we set our buffer pointer to a chunk of memory "initialBufferSize" large. this is called memory allocation.

buffer = new float[initialBufferSize];

// now we set that portion of memory to equal 0, or else it may be filled with garbage

memset(buffer, 0, initialBufferSize * sizeof(float));

// 0 output channels,

// 1 input channel

// 44100 samples per second

// 512 samples per buffer

// 4 num buffers (latency)

ofSoundStreamSetup(0, 1, this, sampleRate, initialBufferSize, 4);

ofSetFrameRate(60);

// resize our window

width = 480;

height = 320;

ofSetWindowShape(width, height);

}

//--------------------------------------------------------------

void testApp::update(){

}

//--------------------------------------------------------------

void testApp::draw(){

/*

// initialize a variable with 1 element only

float buffer;

buffer = 0;

// initialize an array of 512 elements

float buffer[512];

// can't write: buffer = 0;

buffer[0] = 0;

buffer[1] = 0;

...

...

buffer[511] = 0;

// create a pointer which points to memory.

// a pointer declared without an initializer holds a garbage address (it is not NULL by default),

// so we initialize it straight away: the right side of the equation allocates 512 elements in memory,

// and sets the pointer on the left side to "point" to that location in memory.

float *buffer = new float[512];

// we can "dereference" the pointer using the same notation as the array above

buffer[0] = 0; // equivalent to: *(buffer + 0) = 0;

buffer[1] = 0;

...

...

buffer[511] = 0;

*/

ofBackground(0);

// let's move halfway down the screen

ofTranslate(0, height/2);

// ratio of the screen size to the size of my buffer

float width_ratio = width / (float)initialBufferSize;

// change future drawing commands to be white

ofSetColor(255, 255, 255);

// we rescale our drawing to be 100x as big, since our audio waveform is only between [-1,1].

float amplitude = 100;

// let's draw the entire buffer by drawing tiny line segments from [0-1, 1-2, 2-3, 3-4, ... 510-511]

for (int i = 1; i < initialBufferSize; i++) { // we start at 1 instead of 0

ofLine((i-1)*width_ratio, // the first time this will be the x-value of the 0th buffer value

buffer[i-1]*amplitude, // the y-value of the 0th buffer value

i*width_ratio, // the x-value of the 1st buffer value

buffer[i]*amplitude); // the y-value of the 1st buffer value

}

}

//--------------------------------------------------------------

void testApp::audioIn(float * input, int bufferSize, int nChannels){

// just makes sure we didn't set "initialBufferSize" to something our audio card can't handle.

if( initialBufferSize != bufferSize ){

ofLog(OF_LOG_ERROR, "your buffer size was set to %i - but the stream needs a buffer size of %i", initialBufferSize, bufferSize);

return;

}

// we copy the samples from the "input" which is our microphone, to a variable

// our entire class knows about, buffer. this buffer has been allocated to have

// the same amount of memory as each "Frame" or "bufferSize" of audio has.

// so we copy the whole 512 sample chunk into our buffer so we can draw it.

for (int i = 0; i < bufferSize; i++){

buffer[i] = input[i];

}

}

//--------------------------------------------------------------

void testApp::touchDown(ofTouchEventArgs &touch) {}

void testApp::touchMoved(ofTouchEventArgs &touch) {}

void testApp::touchUp(ofTouchEventArgs &touch) {}

void testApp::touchDoubleTap(ofTouchEventArgs &touch) {}

void testApp::touchCancelled(ofTouchEventArgs &touch) {}
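In the Microphone Input app, setup() allocates `buffer` with `new float[initialBufferSize]`, but nothing ever releases it. Memory from `new[]` should be returned with `delete[]`, for example in an `exit()` method like the one the Week 3 app declares; alternatively, a `std::vector<float>` manages the memory for you, as the Recording Sound app's header does. A sketch of both approaches, illustrating the array/pointer notes in draw(); the function names are hypothetical.

```cpp
#include <cstring>
#include <vector>

// Allocate with new[], zero it as setup() does, check that buffer[i]
// and *(buffer + i) name the same element, then free the memory.
bool pointerIndexingMatches(int size) {
    float *buffer = new float[size];                  // allocate
    std::memset(buffer, 0, size * sizeof(float));     // clear the garbage
    buffer[size - 1] = 0.5f;                          // write with []
    bool same = true;
    for (int i = 0; i < size; i++) {
        if (buffer[i] != *(buffer + i)) same = false; // read with pointer arithmetic
    }
    bool lastOk = *(buffer + size - 1) == 0.5f;
    delete[] buffer;                                  // every new[] needs a delete[]
    return same && lastOk;
}

// The same storage with std::vector: zero-filled on construction and
// released automatically, so there is nothing to delete.
std::vector<float> makeZeroedBuffer(int size) {
    return std::vector<float>(size, 0.0f);
}
```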

Recording Sound

For this example, we want to record a bit of sound with the help of our button class. We need to copy the button class files (padButton.h/padButton.cpp) into our project and remember to add the images (button.png/button-down.png) to our data folder. We also modified our button class so that the “pressed” and “released” functions return a boolean value. This code is copied below.
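The modified padButton may differ in its details; here is a hedged sketch of one way boolean return values could work, in plain C++. `PadButtonSketch` and `handleTouch` are hypothetical: `pressed` returns true when the touch hits the button, and `released` returns true when the button had been down, which lets the app decide whether to start recording or playing.

```cpp
// Hypothetical sketch of a button whose callbacks report what happened.
class PadButtonSketch {
public:
    PadButtonSketch(int x, int y, int w, int h)
        : bx(x), by(y), bw(w), bh(h), down(false) {}

    // returns true if this touch hit the button (strict bounds, as in padButton)
    bool pressed(int x, int y) {
        bool hit = x > bx && y > by && x < bx + bw && y < by + bh;
        if (hit) down = true;
        return hit;
    }

    // returns true if the button was down, i.e. a press just finished
    bool released(int /*x*/, int /*y*/) {
        bool wasDown = down;
        down = false;
        return wasDown;
    }

private:
    int bx, by, bw, bh;
    bool down;
};

// Hypothetical use: a hit on the record button starts recording;
// a touch elsewhere leaves the current bRecording state unchanged.
bool handleTouch(PadButtonSketch &recordButton, int x, int y, bool bRecording) {
    if (recordButton.pressed(x, y)) {
        return true;
    }
    return bRecording;
}
```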

testApp.h

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#pragma once

#include "ofMain.h"

#include "ofxiPhone.h"

#include "ofxiPhoneExtras.h"

#include "padButton.h"

class testApp : public ofxiPhoneApp{

public:

void setup();

void update();

void draw();

void touchDown(ofTouchEventArgs &touch);

void touchMoved(ofTouchEventArgs &touch);

void touchUp(ofTouchEventArgs &touch);

void touchDoubleTap(ofTouchEventArgs &touch);

void touchCancelled(ofTouchEventArgs &touch);

void audioIn( float * input, int bufferSize, int nChannels );

void audioOut( float * output, int bufferSize, int nChannels );

// our screen size

int width, height;

// variables for audio

int initialBufferSize;

int sampleRate;

// this vector will store our audio recording

vector<float> buffer;

// frame will tell us "what chunk of audio are we currently playing back".

// as we record and play back in "chunks" also called "frames", we will need

// to keep track of which frame we are playing during playback

int frame;

// frame will run until it hits the final recorded frame, which is numFrames

// we increment this value every time we record a new frame of audio

int numFrames;

// our buttons for user interaction

padButton button_play, button_record;

// determined based on whether the user has pressed the play or record buttons

bool bRecording, bPlaying;

};

testApp.mm

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#include "testApp.h"

//--------------------------------------------------------------

void testApp::setup(){

// register touch events

ofRegisterTouchEvents(this);

ofxiPhoneSetOrientation(OFXIPHONE_ORIENTATION_LANDSCAPE_RIGHT);

//for some reason on the iphone simulator 256 doesn't work - it comes in as 512!

//so we do 512 - otherwise we crash

initialBufferSize = 512;

sampleRate = 44100;

//buffer = new float[initialBufferSize];

//memset(buffer, 0, initialBufferSize * sizeof(float));

// 1 output channel, <-- different from the previous example, as now we want to play back

// 1 input channel

// 44100 samples per second

// 512 samples per buffer

// 4 num buffers (latency)

ofSoundStreamSetup(1, 1, this, sampleRate, initialBufferSize, 4);

ofSetFrameRate(60);

// we initialize our two buttons to act as a play and a record button.

button_record.loadImages("button.png", "button-down.png");

button_play.loadImages("button.png", "button-down.png");

button_record.setPosition(100, 100);

button_record.setSize(100, 100);

button_play.setPosition(250, 100);

button_play.setSize(100, 100);

// initially not recording

bRecording = false;

bPlaying = false;

// which audio frame am i currently playing back

frame = 0;

// how many audio frames did we record?

numFrames = 0;

width = 480;

height = 320;

ofSetWindowShape(width, height);

}

//--------------------------------------------------------------

void testApp::update(){

}

//--------------------------------------------------------------

void testApp::draw(){

ofBackground(0);

// draw our buttons

button_record.draw();

button_play.draw();

ofTranslate(0, height/2);

// let's output a string on the screen that helps us determine what the state of the program is

if (bPlaying) {

ofDrawBitmapString("Playing: True", 20,20);

}

else {

ofDrawBitmapString("Playing: False", 20,20);

}

if (bRecording) {

ofDrawBitmapString("Recording: True", 20,40);

}

else {

ofDrawBitmapString("Recording: False", 20,40);

}

}

//--------------------------------------------------------------

void testApp::audioOut(float * output, int bufferSize, int nChannels){

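// if we are playing back audio (if the user is pressing the play button)

// and we have recorded at least one frame (with numFrames == 0 the buffer is empty and % numFrames divides by zero)

if(bPlaying && numFrames > 0)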

{

// we set the output to be our recorded buffer

for (int i = 0; i < bufferSize; i++){

// so we have to access the current "playback frame" which is a variable

// "frame". this variable helps us determine which frame we should play back.

// because one frame is only 512 samples, or roughly 1/86th of a second of audio at 44100 Hz, we would like

// to hear more than just that one frame. so we play back not just the first frame,

// but every frame after that... after about 86 frames of audio, we will have heard

// 1 second of the recording...

output[i] = buffer[i + frame*bufferSize];

}

// we have to increase our frame counter in order to hear farther into the audio recording

frame = (frame + 1) % numFrames;

}

// else don't output anything to the speaker

else {

memset(output, 0, nChannels * bufferSize * sizeof(float));

}

}

//--------------------------------------------------------------

void testApp::audioIn(float * input, int bufferSize, int nChannels){

if( initialBufferSize != bufferSize ){

ofLog(OF_LOG_ERROR, "your buffer size was set to %i - but the stream needs a buffer size of %i", initialBufferSize, bufferSize);

return;

}

// if we are recording

if(bRecording)

{

// let's add the current frame of audio input to our recording buffer. this is 512 samples.

// (note: another way to do this is to copy the whole chunk of memory using memcpy)

for (int i = 0; i < bufferSize; i++)

{

// we will add a sample at a time to the back of the buffer, increasing the size of "buffer"

buffer.push_back(input[i]);

}

// we also need to keep track of how many audio "frames" we have. this is how many times

// we have recorded a chunk of 512 samples. we refer to that chunk of 512 samples as 1 frame.

numFrames++;

}

// otherwise we set the input to 0

else

{

// set the chunk in memory pointed to by "input" to 0. the

// size of the chunk is the 3rd argument.

memset(input, 0, nChannels * bufferSize * sizeof(float));

}

}

//--------------------------------------------------------------

void testApp::touchDown(ofTouchEventArgs &touch){

//bRecording = !bRecording;

// NOTE: we have modified our button class to return true or false when the button was pressed

// we set playing or recording to be true depending on whether the user pressed that button

bPlaying = button_play.pressed(touch.x, touch.y);

bRecording = button_record.pressed(touch.x, touch.y);

}

//--------------------------------------------------------------

void testApp::touchMoved(ofTouchEventArgs &touch){

}

//--------------------------------------------------------------

void testApp::touchUp(ofTouchEventArgs &touch){

// when the user stops pressing the button, we are no longer playing or recording

bPlaying = !button_play.released(touch.x, touch.y);

bRecording = !button_record.released(touch.x, touch.y);

}

//--------------------------------------------------------------

void testApp::touchDoubleTap(ofTouchEventArgs &touch){

}

//--------------------------------------------------------------

void testApp::touchCancelled(ofTouchEventArgs& args){

}
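To make the frame arithmetic in audioIn() and audioOut() easier to see, the same logic can be pulled out of openFrameworks into plain C++. The sketch below uses a hypothetical ChunkRecorder class (not an openFrameworks class) and assumes mono audio with a fixed chunk size: record() appends one chunk, as audioIn() does while bRecording is true, and play() copies out one chunk and advances the frame counter with wraparound, as audioOut() does. It writes silence when nothing has been recorded yet, because `frame % numFrames` would otherwise divide by zero.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of openFrameworks): records mono audio in
// fixed-size chunks ("frames") and plays them back one chunk at a time.
class ChunkRecorder {
public:
    explicit ChunkRecorder(std::size_t chunkSize) : chunkSize_(chunkSize) {
        assert(chunkSize > 0);
    }

    // Append one chunk of input samples (what audioIn() does while recording).
    void record(const float* input) {
        samples_.insert(samples_.end(), input, input + chunkSize_);
        ++numFrames_;
    }

    // Fill one chunk of output (what audioOut() does while playing).
    // Writes silence and returns false if nothing has been recorded yet.
    bool play(float* output) {
        if (numFrames_ == 0) {
            for (std::size_t i = 0; i < chunkSize_; ++i) output[i] = 0.0f;
            return false;
        }
        const std::size_t offset = frame_ * chunkSize_;
        for (std::size_t i = 0; i < chunkSize_; ++i) output[i] = samples_[offset + i];
        frame_ = (frame_ + 1) % numFrames_;  // wrap around to the first chunk
        return true;
    }

    std::size_t numFrames() const { return numFrames_; }
    std::size_t frame() const { return frame_; }

private:
    std::size_t chunkSize_;
    std::vector<float> samples_;
    std::size_t frame_ = 0;
    std::size_t numFrames_ = 0;
};

// Duration of one chunk in seconds, e.g. 512 / 44100 is roughly 0.0116 s.
double chunkSeconds(std::size_t chunkSize, double sampleRate) {
    return static_cast<double>(chunkSize) / sampleRate;
}
```

In testApp you could create it with `ChunkRecorder rec(initialBufferSize);` and call `rec.record(input)` and `rec.play(output)` from the two callbacks. Keep in mind that the audio callbacks typically run on a separate audio thread while draw() runs on the main thread; neither this sketch nor the listing above does any locking, so growing the vector while another thread reads it can crash.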

padButton.h

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

// include file only once

#pragma once

// include openframeworks

#include "ofMain.h"

// declare the padbutton class and methods

class padButton {

public:

// enumerators allow us to assign more interesting names to values of an integer

// we could use an integer to the same effect,

// e.g. "int button_state = 0", when our button is down

// and "int button_state = 1", when our button is normal,

// but enumerators allow us to instead say

// "BUTTON_STATE button_state = BUTTON_DOWN", when our button is down,

// "BUTTON_STATE button_state = NORMAL", when our button is normal.

enum BUTTON_STATE {

BUTTON_DOWN,

NORMAL

};

// default constructor - no parameters, no return type

padButton();

// methods which our button class will define

// one for loading images for each of the button states

void loadImages(string state_normal, string state_down);

// setters, to set internal variables

// the position

void setPosition(int x, int y);

// the size

void setSize(int w, int h);

// drawing the buttons

void draw();

// and interaction with the button

bool pressed(int x, int y);

bool released(int x, int y);

private:

// images for drawing

ofImage button_image_normal, button_image_down;

// our position

int button_x, button_y;

// size

int button_width, button_height;

// and internal state of the button

BUTTON_STATE button_state;

};

padButton.cpp

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#include <iostream>

#include "padButton.h"

// default constructor definition

padButton::padButton()

{

// by default, we have a very boring button

button_x = 0;

button_y = 0;

button_width = 0;

button_height = 0;

button_state = NORMAL;

}

void padButton::loadImages(string state_normal, string state_down)

{

// load the images for our buttons

button_image_normal.loadImage(state_normal);

button_image_down.loadImage(state_down);

}

void padButton::setPosition(int x, int y)

{

// set our internal variables

button_x = x;

button_y = y;

}

void padButton::setSize(int w, int h)

{

// set our internal variables

button_width = w;

button_height = h;

}

void padButton::draw()

{

// allow alpha transparency

ofEnableAlphaBlending();

// if our button is normal

if(button_state == NORMAL)

{

// draw the normal button image

button_image_normal.draw(button_x,

button_y,

button_width,

button_height);

}

else

{

// draw the down image

button_image_down.draw(button_x,

button_y,

button_width,

button_height);

}

// ok done w/ alpha blending

ofDisableAlphaBlending();

}

bool padButton::pressed(int x, int y)

{

// compound boolean expressions to determine,

// is our x,y input within the bounds of the button

// we have to check the left, the top, the right, and the bottom sides

// of the button, respectively.

if( x > button_x && y > button_y

&& x < (button_x + button_width)

&& y < (button_y + button_height) )

{

button_state = BUTTON_DOWN;

// return yes since the user pressed the button

return true;

}

else {

// no the user didn't press the button

return false;

}

}

bool padButton::released(int x, int y)

{

// ok back to normal

button_state = NORMAL;

// we always return true since that is how our buttons work.

return true;

}
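The bounds check in padButton::pressed() is easy to get subtly wrong, so it can help to test it on its own. The following is a minimal sketch using a hypothetical ButtonRect struct (not part of the course code) that reproduces the same strict comparisons; as a consequence, a touch exactly on the button's edge counts as outside.

```cpp
#include <cassert>

// Hypothetical plain-C++ version of padButton's hit test (no openFrameworks needed).
struct ButtonRect {
    int x, y, width, height;

    // True if (px, py) lies strictly inside the rectangle, matching the
    // strict comparisons used in padButton::pressed().
    bool contains(int px, int py) const {
        assert(width >= 0 && height >= 0);
        return px > x && py > y && px < x + width && py < y + height;
    }
};
```

Using `>=` for the left and top edges instead would make those edge pixels count as inside; either choice works as long as it is applied consistently.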

Week 5: Building User Interaction

Introduction

This week we extended our audio input/output example by drawing the recorded buffer. We then saw how to control the program flow of our buttons using “booleans”. These booleans describe the state of the program and change how it behaves. Whenever you have user interaction, you have to account for every state the program can get into, because users will try everything. A common practice is to assume the user “will always do the wrong thing” and handle those conditions explicitly, for example by telling the user to record something before trying to play back the recording.
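One way to keep the number of possible states manageable is to replace several independent booleans (such as bRecording, bPlaying and bRecorded) with a single enum and to guard each action explicitly. The sketch below is hypothetical: Deck, DeckState and the message text are illustrative names, not course code. It refuses to play before anything has been recorded and tells the user why, which is the kind of “wrong thing” check described above.

```cpp
#include <cassert>
#include <string>

// Hypothetical tape-deck states; one enum instead of several booleans.
enum class DeckState { Idle, Recording, Playing };

struct Deck {
    DeckState state = DeckState::Idle;
    int numFrames = 0;     // chunks recorded so far
    std::string message;   // feedback to show on screen

    void pressRecord() {
        state = DeckState::Recording;  // recording replaces any playback
        message.clear();
    }

    // Called once per audio chunk while recording (like numFrames++ in audioIn()).
    void recordChunk() {
        assert(state == DeckState::Recording);
        ++numFrames;
    }

    void pressPlay() {
        if (numFrames == 0) {
            // the user did "the wrong thing": explain instead of failing silently
            message = "Record something before playing it back.";
            return;
        }
        state = DeckState::Playing;
        message.clear();
    }

    void release() { state = DeckState::Idle; }
};
```

Because the deck can only be in one state at a time, combinations such as “recording and playing at once” cannot occur by accident.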

Homework: create a tape-cassette app that can rewind, fast-forward, record, and play back, and perhaps also play while fast-forwarding or rewinding. Implement a button for each of these and create your own aesthetic using graphics.
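For the rewind and fast-forward buttons, one simple approach (an assumption, not the only possible design) is to move the playback frame by more than one chunk per audio callback: a step of 1 plays normally, a larger positive step skips ahead, and a negative step moves backwards. The hypothetical advanceFrame() helper below does this with wraparound at both ends; the extra correction is needed because C++'s % operator can return a negative result when the left operand is negative.

```cpp
#include <cassert>

// Hypothetical helper for the tape-cassette homework: move the playback
// frame by `step` chunks (1 = play, e.g. 4 = fast forward, -4 = rewind),
// wrapping around both ends of the recording.
int advanceFrame(int frame, int step, int numFrames) {
    assert(numFrames > 0);
    int next = (frame + step) % numFrames;
    if (next < 0) next += numFrames;  // C++ % can return negative values
    return next;
}
```

In audioOut() you would replace `frame = (frame + 1) % numFrames;` with something like `frame = advanceFrame(frame, step, numFrames);`. Skipping whole chunks sounds choppier than a real tape, which also changes pitch; resampling each chunk would be closer to the real thing but is more work.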

Tape Cassette Player

Make sure you continue to use the iPhone branch of the openFrameworks distribution. I’ve also changed the images for the record and play buttons. You can download the new images to put into your “bin/data” folder here [buttons.zip].

testApp.h

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#pragma once

#include "ofMain.h"

#include "ofxiPhone.h"

#include "ofxiPhoneExtras.h"

#include "padButton.h"

class testApp : public ofxiPhoneApp{

public:

void setup();

void update();

void draw();

void touchDown(ofTouchEventArgs &touch);

void touchMoved(ofTouchEventArgs &touch);

void touchUp(ofTouchEventArgs &touch);

void touchDoubleTap(ofTouchEventArgs &touch);

void touchCancelled(ofTouchEventArgs &touch);

void audioIn( float * input, int bufferSize, int nChannels );

void audioOut( float * output, int bufferSize, int nChannels );

// our screen size

int width, height;

// variables for audio

int initialBufferSize;

int sampleRate;

// this vector will store our audio recording

vector<float> buffer;

// frame will tell us "what chunk of audio are we currently playing back".

// as we record and play back in "chunks" also called "frames", we will need

// to keep track of which frame we are playing during playback

int frame;

// frame will run until it hits the final recorded frame, which is numFrames

// we increment this value every time we record a new frame of audio

int numFrames;

// our buttons for user interaction

padButton button_play, button_record;

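// program state: bRecording/bPlaying follow the buttons, bRecorded becomes true once audio has been recorded,

// and bDoubleTapped keeps playing or recording going after a double tap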

bool bRecording, bPlaying, bRecorded, bDoubleTapped;

};

testApp.mm

/*

* Created by Parag K. Mital - http://pkmital.com

* Contact: parag@pkmital.com

*

* Copyright 2011 Parag K. Mital. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person

* obtaining a copy of this software and associated documentation

* files (the "Software"), to deal in the Software without

* restriction, including without limitation the rights to use,

* copy, modify, merge, publish, distribute, sublicense, and/or sell

* copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following

* conditions:

*

* The above copyright notice and this permission notice shall be

* included in all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

* EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

* OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

* NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

* HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

* WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

* OTHER DEALINGS IN THE SOFTWARE.

*/

#include "testApp.h"

//--------------------------------------------------------------

void testApp::setup(){

// register touch events

ofRegisterTouchEvents(this);

ofxiPhoneSetOrientation(OFXIPHONE_ORIENTATION_LANDSCAPE_RIGHT);

//for some reason on the iphone simulator 256 doesn't work - it comes in as 512!

//so we do 512 - otherwise we crash

initialBufferSize = 512;

sampleRate = 44100;

//buffer = new float[initialBufferSize];

//memset(buffer, 0, initialBufferSize * sizeof(float));

// 1 output channel, <-- different from the previous example, as now we want to play back

// 1 input channel

// 44100 samples per second

// 512 samples per buffer = 1 frame

// 4 num buffers (latency)

ofSoundStreamSetup(1, 1, this, sampleRate, initialBufferSize, 4);

ofSetFrameRate(60);

// we initialize our two buttons to act as a play and a record button.

button_record.loadImages("button-record.png", "button-record-down.png");

button_play.loadImages("button-play.png", "button-play-down.png");

button_record.setPosition(100, 110);

button_record.setSize(100, 100);

button_play.setPosition(250, 110);

button_play.setSize(100, 100);

// initially not recording

bRecording = false;

bPlaying = false;

bRecorded = false;

bDoubleTapped = false;

// which audio frame am i currently playing back

frame = 0;

// how many audio frames did we record?

numFrames = 0;

width = 480;

height = 320;

ofSetWindowShape(width, height);

}

//--------------------------------------------------------------

void testApp::update(){

}

//--------------------------------------------------------------

void testApp::draw(){

ofBackground(0);

// draw our buttons

// change future drawing commands to be white, slightly transparent (alpha 200)

ofSetColor(255, 255, 255, 200);

button_record.draw();

button_play.draw();

if(bRecorded)

{

// move the drawing down halfway

ofTranslate(0, height/2);

// this is going to store how many pixels we multiply our drawing by

float amplitude = 100;

// conversion from samples to pixels

float width_ratio = width / (float)(initialBufferSize*numFrames);

// draw our audio buffer

for (int i = 1; i < initialBufferSize*numFrames; i++)

{

ofLine((i - 1) * width_ratio,

buffer[(i-1)] * amplitude,

i * width_ratio,

buffer[i] * amplitude);

}

}

/*

if (bRecorded) {

ofPushMatrix();

// let's move halfway down the screen

ofTranslate(0, height/2);

// ratio of the screen size to the size of my buffer

float width_ratio = width / (float)(numFrames*initialBufferSize);

// change future drawing commands to be white

ofSetColor(255, 255, 255);

// we rescale our drawing to be 100x as big, since our audio waveform is only between [-1,1].

float amplitude = 100;

int step = numFrames*initialBufferSize / width;

// let's draw the buffer using line segments between every step-th sample: [0-step, step-2*step, ...]

for (int i = step; i < numFrames*initialBufferSize; i+=step) { // we start at step instead of 0

ofLine((i-step)*width_ratio, // the first time this will be the x-value of the 0th buffer value

buffer[i-step]*amplitude, // the y-value of the 0th buffer value

i*width_ratio, // the x-value of the 1st buffer value

buffer[i]*amplitude); // the y-value of the 1st buffer value

}

ofSetColor(255, 20, 20);

ofLine(frame*initialBufferSize*width_ratio, -amplitude, frame*initialBufferSize*width_ratio, amplitude);

ofPopMatrix();

}

*/

/*

// let's output a string on the screen that helps us determine what the state of the program is

if (bPlaying) {

ofDrawBitmapString("Playing: True", 20,20);

}

else {

ofDrawBitmapString("Playing: False", 20,20);

}

if (bRecording) {

ofDrawBitmapString("Recording: True", 20,40);

}

else {

ofDrawBitmapString("Recording: False", 20,40);

}

*/

}

//--------------------------------------------------------------

void testApp::audioOut(float * output, int bufferSize, int nChannels){

// if we are playing back audio (if the user is pressing the play button)

if(bPlaying && bRecorded)

{

// we set the output to be our recorded buffer

for (int i = 0; i < bufferSize; i++){

// so we have to access the current "playback frame" which is a variable

// "frame". this variable helps us determine which frame we should play back.

// because one frame is only 512 samples, or roughly 1/86th of a second of audio at 44100 Hz, we would like

// to hear more than just that one frame. so we play back not just the first frame,

// but every frame after that... after about 86 frames of audio, we will have heard

// 1 second of the recording...

output[i] = buffer[i + frame*bufferSize];

}

// we have to increase our frame counter in order to hear farther into the audio recording

frame = (frame + 1) % numFrames;

}

// else don't output anything to the speaker

else {

memset(output, 0, nChannels * bufferSize * sizeof(float));

}

}

//--------------------------------------------------------------

void testApp::audioIn(float * input, int bufferSize, int nChannels){

if( initialBufferSize != bufferSize ){

ofLog(OF_LOG_ERROR, "your buffer size was set to %i - but the stream needs a buffer size of %i", initialBufferSize, bufferSize);

return;

}

// if we are recording

if(bRecording)

{

// let's add the current frame of audio input to our recording buffer. this is 512 samples.

// (note: another way to do this is to copy the whole chunk of memory using memcpy)

for (int i = 0; i < bufferSize; i++)

{

// we will add a sample at a time to the back of the buffer, increasing the size of "buffer"

buffer.push_back(input[i]);

}

// we also need to keep track of how many audio "frames" we have. this is how many times

// we have recorded a chunk of 512 samples. we refer to that chunk of 512 samples as 1 frame.

numFrames++;

bRecorded = true;

}

// otherwise we set the input to 0

else

{

// set the chunk in memory pointed to by "input" to 0. the

// size of the chunk is the 3rd argument.

memset(input, 0, nChannels * bufferSize * sizeof(float));

}

}

//--------------------------------------------------------------

void testApp::touchDown(ofTouchEventArgs &touch){

//bRecording = !bRecording;

// NOTE: we have modified our button class to return true or false when the button was pressed

// we set playing or recording to be true depending on whether the user pressed that button

bPlaying = button_play.pressed(touch.x, touch.y);

bRecording = button_record.pressed(touch.x, touch.y);

}

//--------------------------------------------------------------

void testApp::touchMoved(ofTouchEventArgs &touch){

}

//--------------------------------------------------------------

void testApp::touchUp(ofTouchEventArgs &touch){

if (!bDoubleTapped) {

// when the user stops pressing the button, we are no longer playing or recording

if(button_play.released(touch.x, touch.y))

bPlaying = false;

if(button_record.released(touch.x, touch.y))

bRecording = false;

}

bDoubleTapped = false;

}

//--------------------------------------------------------------

void testApp::touchDoubleTap(ofTouchEventArgs &touch){

bPlaying = button_play.pressed(touch.x, touch.y);

bRecording = button_record.pressed(touch.x, touch.y);

bDoubleTapped = true;

}

//--------------------------------------------------------------

void testApp::touchCancelled(ofTouchEventArgs& args){

}
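The active loop in draw() above issues one ofLine() call per recorded sample, so ten seconds of audio at 44100 Hz means 441,000 lines every frame, which is likely to slow the app down. The commented-out version avoids this by drawing only every step-th sample. The sketch below isolates that idea in plain C++, using a hypothetical Point2 struct in place of ofPoint; it returns the vertices of a polyline whose size is tied to the screen width rather than to the recording length.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for ofPoint.
struct Point2 {
    float x, y;
};

// Returns waveform vertices that fit `width` pixels. Taking every step-th
// sample keeps the point count below about twice the width, however long
// the recording is. y is centered on 0 (translate by height/2 when drawing).
std::vector<Point2> waveformPoints(const std::vector<float>& samples,
                                   float width, float amplitude) {
    assert(width >= 1.0f);
    std::vector<Point2> points;
    if (samples.empty()) return points;
    const std::size_t n = samples.size();
    std::size_t step = n / static_cast<std::size_t>(width);
    if (step == 0) step = 1;                              // short buffers: every sample
    const float xScale = width / static_cast<float>(n);  // samples -> pixels
    for (std::size_t i = 0; i < n; i += step) {
        points.push_back({static_cast<float>(i) * xScale, samples[i] * amplitude});
    }
    return points;
}
```

In draw() you would then connect consecutive points with ofLine(). Sampling every step-th value can miss short peaks; drawing the minimum and maximum of each step-sized block is a common refinement.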

Week 6: Pickers, Images, Cameras

Introduction

This week we looked at image and camera interaction through (1) ofxiPhoneImagePicker, the openFrameworks wrapper around UIImagePickerController, (2) the ofImage class, (3) the ofVideoGrabber and ofVideoPlayer classes, and (4) the ofxCvColorImage and ofxCvGrayscaleImage classes. Ultimately, we were interested in the pixels that represent the image in each of these classes, since moving those pixels from one object to another let us do different things. For instance, moving the pixels from an ofVideoGrabber to an ofxCvColorImage allowed us to convert them to grayscale and store them in an ofxCvGrayscaleImage, which we could then draw on the screen. Of course, there are many more interesting things we can do with these classes.

Just as with audio, this pixel information is accessed through a pointer to memory whose size is determined by the size of the image. The type of this memory, however, is generally not a float as in audio but an unsigned char: 8 bits, or 256 possible values per color channel, compared to the 32-bit floating-point samples we used for audio. We could access the pixels of these objects with a function called getPixels() (ofxiPhoneImagePicker is the odd one out: there we had to use a member variable called pixels, as in myOfxiPhoneImagePicker.pixels). Moving the pixels from one object to another meant calling the setFromPixels method of the receiving object. For example, converting an ofVideoGrabber to an ofxCvColorImage could be done like so: myOfxCvColorImage.setFromPixels(myOfVideoGrabber.getPixels(), widthOfImage, heightOfImage);
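To see what the unsigned char pixel layout means in practice, here is a minimal plain C++ sketch that converts an interleaved RGB buffer (assumed to be 3 bytes per pixel, as for an 8-bit color image) into one byte per pixel of grayscale. It uses the Rec. 601 luma weights (0.299, 0.587, 0.114), which are the weights OpenCV uses for its RGB-to-gray conversion; since ofxCvGrayscaleImage is built on OpenCV, its results should be close, though rounding may differ. The function name rgbToGray is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Converts interleaved 8-bit RGB pixels (R,G,B,R,G,B,...) to 8-bit grayscale
// using the Rec. 601 luma weights 0.299, 0.587, 0.114.
std::vector<unsigned char> rgbToGray(const unsigned char* rgb, int width, int height) {
    assert(rgb != nullptr && width > 0 && height > 0);
    const std::size_t count = static_cast<std::size_t>(width) * height;
    std::vector<unsigned char> gray(count);
    for (std::size_t i = 0; i < count; ++i) {
        const unsigned char r = rgb[3 * i];
        const unsigned char g = rgb[3 * i + 1];
        const unsigned char b = rgb[3 * i + 2];
        // +0.5 rounds to nearest; the weights sum to 1, so the result stays in 0..255
        gray[i] = static_cast<unsigned char>(0.299 * r + 0.587 * g + 0.114 * b + 0.5);
    }
    return gray;
}
```

The input holds width * height * 3 bytes while the output holds width * height bytes, which is why a grayscale image needs a third of the memory of the color one.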

We also had a tour of publishing on the App Store. This requires a number of steps, including getting a Distribution certificate and a Distribution provisioning profile, creating an App ID, creating the app in https://itunesconnect.apple.com/, and making sure your app is built in XCode with code signing. Once you have built the app, you run the “Application Loader” stored in your /Developer/Applications folder and submit the compressed application to Apple for approval. There is also a fairly good guide on the developer website when you visit the “iOS Provisioning Portal” and click on “Distribution”.

Homework: Try submitting an app we have already built, or extend one that we have built such as the drum pad recorders or the tape-cassette and then submit that.

Week 7: ofSoundPlayer and Accelerometer; GPS, MapKit, Annotations, and Interface Builder

ofSoundPlayer and Accelerometer

We first looked at how openFrameworks gives us the iPhone/iPad accelerometer data through the global variable ofxAccelerometer. Starting from the TouchAndAccelExample in the iPhoneSpecificExamples folder, we extended the simple ball-physics game to incorporate sound using ofSoundPlayer. This class lets us play sounds without having to use the audioIn/audioOut methods, but it only provides simple routines for dealing with the audio. To play a sound when the ball hits a wall, we first had to detect when that happens. We added a simple boolean variable, bHitWall, to the Ball class (Ball.h) to tell us this, and then created a new SonicBall class that has a Ball object inside it. We could also have extended the Ball class using inheritance, but you can look into this on your own time. Note that when you create a C++ class in an iOS project, your “.cpp” files must be renamed to “.mm” if they include (directly or indirectly) any Objective-C code, such as the ofxiPhone headers, so that XCode compiles them as Objective-C++. As a safe bet, just rename your “.cpp” files to “.mm” and it will work fine.

Ball.h

#pragma once

#define BOUNCE_FACTOR 0.7

#define ACCELEROMETER_FORCE 0.2

#define RADIUS 20

class Ball {

public:

ofPoint pos;

ofPoint vel;

ofColor col;

ofColor touchCol;

bool bDragged, bHitWall;

//----------------------------------------------------------------

void init(int id) {

bHitWall = false;

pos.set(ofRandomWidth(), ofRandomHeight(), 0);

vel.set(ofRandomf(), ofRandomf(), 0);

float val = ofRandom( 30, 100 );

col.set( val, val, val, 120 );

if( id % 3 == 0 ){

touchCol.setHex(0x809d00);

}else if( id % 3 == 1){

touchCol.setHex(0x009d88);

}else{

touchCol.setHex(0xf7941d);

}

bDragged = false;

}

//----------------------------------------------------------------

void update() {

bHitWall = false;

vel.x += ACCELEROMETER_FORCE * ofxAccelerometer.getForce().x * ofRandomuf();

vel.y += -ACCELEROMETER_FORCE * ofxAccelerometer.getForce().y * ofRandomuf(); // this one is subtracted cos world Y is opposite to opengl Y

// add vel to pos

pos += vel;

// check boundaries

if(pos.x < RADIUS) {

pos.x = RADIUS;

vel.x *= -BOUNCE_FACTOR;

bHitWall = true;

} else if(pos.x >= ofGetWidth() - RADIUS) {

pos.x = ofGetWidth() - RADIUS;

vel.x *= -BOUNCE_FACTOR;

bHitWall = true;

}

if(pos.y < RADIUS) {

pos.y = RADIUS;

vel.y *= -BOUNCE_FACTOR;

bHitWall = true;

} else if(pos.y >= ofGetHeight() - RADIUS) {

pos.y = ofGetHeight() - RADIUS;

vel.y *= -BOUNCE_FACTOR;

bHitWall = true;

}

}

//----------------------------------------------------------------

void draw() {

if( bDragged ){

ofSetColor(touchCol);

ofCircle(pos.x, pos.y, 80);

}else{

ofSetColor(col);

ofCircle(pos.x, pos.y, RADIUS);

}

}

//----------------------------------------------------------------

void moveTo(int x, int y) {

pos.set(x, y, 0);

vel.set(0, 0, 0);

}

};
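The wall check in Ball::update() and the sound trigger in SonicBall can also be tried outside openFrameworks. The sketch below is a hypothetical one-dimensional version: bounce() clamps the position, reverses and damps the velocity, and returns true on a hit (the role of bHitWall). SonicBall passes the raw speed straight to setSpeed(), so a slow bump plays the sound very slowly; speedToPlaybackRate() clamps the speed into a range, where the minimum and maximum are assumptions you would tune by ear.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 1-D version of Ball::update()'s boundary check: clamps the
// position to [radius, size - radius], reflects and damps the velocity,
// and reports whether a wall was hit (the role of bHitWall).
bool bounce(float& pos, float& vel, float size, float radius, float bounceFactor) {
    assert(size > 2 * radius);
    if (pos < radius) {
        pos = radius;
        vel *= -bounceFactor;
        return true;
    }
    if (pos >= size - radius) {
        pos = size - radius;
        vel *= -bounceFactor;
        return true;
    }
    return false;
}

// Maps the ball's speed to a playback speed, clamped to [minRate, maxRate]
// so that slow hits don't play at (near) zero speed.
float speedToPlaybackRate(float vx, float vy, float minRate, float maxRate) {
    const float speed = std::sqrt(vx * vx + vy * vy);
    return std::fmin(std::fmax(speed, minRate), maxRate);
}
```

A ball resting against a wall under the accelerometer's pull may register a hit on many consecutive frames, so you might also require a minimum speed before calling play().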

SonicBall.h

Be sure to add your own sound to the “data” folder. I have grabbed one off of freesound.org.


#pragma once

#include "Ball.h"

class SonicBall {

public:

SonicBall()

{

sound.loadSound("106727__kantouth__cartoon-bing-low.wav");

}

void init(int id)

{

ball.init(id);

}

void update()

{

ball.update();

if(ball.bHitWall)

{

sound.setSpeed(sqrt(ball.vel.x*ball.vel.x + ball.vel.y*ball.vel.y));

sound.play();

}

}

void draw()

{

ball.draw();

}

Ball ball;

ofSoundPlayer sound;

}#pragma once


testApp.h

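We just have to rename the parts of testApp that deal with “Ball” so that they use “SonicBall” instead, since SonicBall mirrors most of Ball's functionality. The exception is moveTo (and the bDragged flag): SonicBall has no moveTo() or bDragged of its own, which is why the bodies of the touch handlers in testApp.mm are commented out. Adding them yourself is straightforward.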

#pragma once

#include "ofMain.h"
#include "ofxiPhone.h"
#include "ofxiPhoneExtras.h"

#include "SonicBall.h"

class testApp : public ofxiPhoneApp {

public:
    void setup();
    void update();
    void draw();
    void exit();

    void touchDown(int x, int y, int id);
    void touchMoved(int x, int y, int id);
    void touchUp(int x, int y, int id);
    void touchDoubleTap(int x, int y, int id);
    void touchCancelled(ofTouchEventArgs &touch);

    void lostFocus();
    void gotFocus();
    void gotMemoryWarning();
    void deviceOrientationChanged(int newOrientation);

    void gotMessage(ofMessage msg);

    ofImage arrow;
    vector <SonicBall> balls;
};

testApp.mm

#include "testApp.h"

//--------------------------------------------------------------
void testApp::setup(){
    ofBackground(225, 225, 225);
    ofSetCircleResolution(80);

    // register touch events
    ofxRegisterMultitouch(this);

    // initialize the accelerometer
    ofxAccelerometer.setup();

    //iPhoneAlerts will be sent to this.
    ofxiPhoneAlerts.addListener(this);

    balls.assign(10, SonicBall());

    arrow.loadImage("arrow.png");
    arrow.setAnchorPercent(1.0, 0.5);

    // initialize all of the Ball particles
    for(int i=0; i<balls.size(); i++){
        balls[i].init(i);
    }
}

//--------------------------------------------------------------
void testApp::update() {
    for(int i=0; i < balls.size(); i++){
        balls[i].update();
    }

    printf("x = %f y = %f \n", ofxAccelerometer.getForce().x, ofxAccelerometer.getForce().y);
}

//--------------------------------------------------------------
void testApp::draw() {
    ofSetColor(54);
    ofDrawBitmapString("Multitouch and Accel Example", 10, 20);

    float angle = 180 - RAD_TO_DEG * atan2( ofxAccelerometer.getForce().y, ofxAccelerometer.getForce().x );

    ofEnableAlphaBlending();
    ofSetColor(255);
    ofPushMatrix();
    ofTranslate(ofGetWidth()/2, ofGetHeight()/2, 0);
    ofRotateZ(angle);
    arrow.draw(0,0);
    ofPopMatrix();

    ofPushStyle();
    ofEnableBlendMode(OF_BLENDMODE_MULTIPLY);
    for(int i = 0; i< balls.size(); i++){
        balls[i].draw();
    }
    ofPopStyle();
}

//--------------------------------------------------------------
void testApp::exit() {
}

//--------------------------------------------------------------
void testApp::touchDown(int x, int y, int id){
    // printf("touch %i down at (%i,%i)\n", id, x,y);
    // balls[id].moveTo(x, y);
    // balls[id].bDragged = true;
}

//--------------------------------------------------------------
void testApp::touchMoved(int x, int y, int id){
    // printf("touch %i moved at (%i,%i)\n", id, x, y);
    // balls[id].moveTo(x, y);
    // balls[id].bDragged = true;
}

//--------------------------------------------------------------
void testApp::touchUp(int x, int y, int id){
    // balls[id].bDragged = false;
    // printf("touch %i up at (%i,%i)\n", id, x, y);
}

//--------------------------------------------------------------
void testApp::touchDoubleTap(int x, int y, int id){
    printf("touch %i double tap at (%i,%i)\n", id, x, y);
}

//--------------------------------------------------------------
void testApp::lostFocus() {
}

//--------------------------------------------------------------
void testApp::gotFocus() {
}

//--------------------------------------------------------------
void testApp::gotMemoryWarning() {
}

//--------------------------------------------------------------
void testApp::deviceOrientationChanged(int newOrientation){
}

//--------------------------------------------------------------
void testApp::touchCancelled(ofTouchEventArgs& args){
}

//--------------------------------------------------------------
void testApp::gotMessage(ofMessage msg){
}

GPS, MapKit, Annotations, and Interface Builder

The MapKit example will probably just display a gray screen with a circle and a line on it, and no map. To get the map to show, we have to change a few things. First, open the file /addons/ofxiPhone/src/ES1Renderer.mm and find the resizeFromLayer: method. Change its first line of code to:

- (BOOL)resizeFromLayer:(CAEAGLLayer *)layer
{
    layer.opaque = NO; // <--- CHANGE THIS LINE OF CODE

    // Allocate color buffer backing based on the current layer size
    //[context renderbufferStorage:GL_RENDERBUFFER_OES fromDrawable:layer];