Biography

Parag K. Mital, Ph.D. (US) is an Indian American artist and interdisciplinary researcher who has worked between computational arts and machine learning for nearly 20 years. He currently runs the artist studio The Garden in the Machine, Inc., based in Los Angeles, CA. His varied scientific background includes machine and deep learning, film cognition, eye-tracking studies, and EEG and fMRI research. His artistic practice combines generative film experiences, augmented reality hallucinations, and expressive control of large audiovisual corpora, tackling questions of identity, memory, and the nature of perception. The balance between his scientific and artistic practices allows each to reflect on the other: the science drives the theories, and the artwork redefines the questions asked within the research.

His work has been published and exhibited internationally, including at the Prix Ars Electronica, Walt Disney Concert Hall, ACM Multimedia, the Victoria & Albert Museum, London’s Science Museum, the Oberhausen Short Film Festival, and the British Film Institute, and has been featured in press including the BBC, the New York Times, Fast Company, and others. He has also taught undergraduate and graduate courses, primarily in applied computational arts with a focus on machine learning, at UCLA, the University of Edinburgh, Goldsmiths, University of London, Dartmouth College, the Srishti Institute of Art, Design and Technology, and the California Institute of the Arts. He is also a frequent collaborator with artists and cultural institutions such as Massive Attack, Sigur Rós, David Lynch, Sphere, Google, Es Devlin, and Refik Anadol Studio.

Current Work

-

2010-current

Founder

The Garden in the Machine, Inc.

Los Angeles, CA, U.S.A.

Computational arts practices incorporating applied machine learning research and generative film, image, and sound, working with artists and cultural institutions such as Massive Attack, Sigur Rós, David Lynch, Google, Es Devlin, Refik Anadol Studio, LA Phil, Walt Disney Concert Hall, Boiler Room, XL Recordings, and others.

-

2020-current

Adjunct Faculty

UCLA

Los Angeles, CA, U.S.A.

Cultural Appropriation with Machine Learning is a special topics course, taught in 2020 and scheduled again for Fall 2023, in which students of the Design and Media Arts program learn both a critical framing for approaching generative arts and how to integrate various tools into their own practice.

Past Work Experience

-

2017-2023

HyperSurfaces is a low-cost, low-power, privacy-preserving technology for understanding vibrations on objects and materials of any shape or size, bringing life to the passive objects around us and merging the physical and data worlds.

-

2022-2024

AI-powered music studio in the browser. Led the research and development of AI tools for musical content creation, composition, arrangement, and synthesis.

-

2019-2020

Worked with international artists, including Martine Syms, Anna Ridler, Allison Parrish, and Paola Torres Núñez del Prado, to help integrate machine learning techniques into their artistic practice.

-

2016-2018

Director of Machine Intelligence / Senior Research Scientist

Kadenze, Inc.

Valencia, CA, U.S.A.

Built a bespoke ML/DL pipeline using Python, TensorFlow, Ruby on Rails, and the ELK stack for recommendation, personalization, and search across various media sources on Kadenze. Set up the backend using CloudFormation, ECS, and the ELK stack, along with various frontend visualizations of models trained on Kadenze data using Kibana, Bokeh, D3.js, and Python. Built bespoke ML/DL solutions to enable analysis and auto-grading of images, sound, and code. Built, taught, and continue to deliver Kadenze’s most successful course on deep learning, Creative Applications of Deep Learning w/ TensorFlow, and fostered collaborations with Google Brain and Nvidia (including use of their HPC cluster) to partner on the course.

-

2016-2018

-

2017

Developed a bespoke neural audio synthesis algorithm for the public launch of the Google Pixel 2 phone, including the backend for a website that served a pre-trained neural audio model for stylization, synthesis, and live processing of voices. Built with Node.js, Redis, TensorFlow, and Google Cloud.

-

2017

Ported a model to TensorFlow to reproduce the results of an Inception-based deep convolutional neural network written in another framework.

-

2015

Machine learning and signal processing of user behavior and activity patterns from GPS and smartphone motion data. MongoDB cluster computing; Mapbox; Python; Objective-C, Swift; Machine learning; Mobile signal processing.

-

2015

Augmented reality; Unity 5; Procedural audiovisual synthesis.

-

2014-2015

Exploring feature learning in audiovisual data, fMRI coding of musical and audiovisual stimuli in experienced and imagined settings, and sound and image synthesis techniques. Designed an experiment for fMRI and behavioral data; collected data using 3T fMRI and PsychoPy; wrote custom preprocessing of the data with AFNI/SUMA/FreeSurfer on the Dartmouth Discovery supercomputing cluster; and developed analyses using univariate and multivariate methods, including hyperalignment measures, with PyMVPA. Principal Investigator: Michael Casey

-

2011

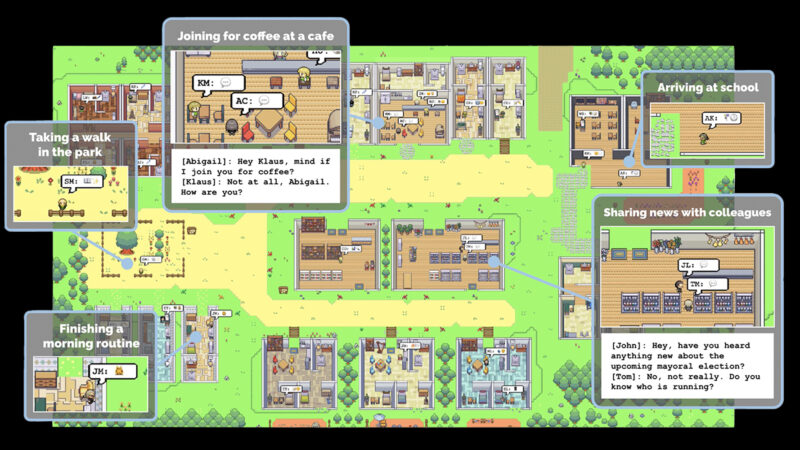

ECHOES is a technology-enhanced learning environment where 5-to-7-year-old children on the autism spectrum and their typically developing peers can explore and improve social and communicative skills by interacting and collaborating with virtual characters (agents) and digital objects. ECHOES provides developmentally appropriate goals and methods of intervention that are meaningful to the individual child, and prioritises communicative skills such as joint attention. Wrote custom computer vision code for calibrating behavioral measures of attention on a large-format touchscreen television. Funded by the EPSRC. Principal Investigators: Oliver Lemon and Kaska Porayska-Pomsta

-

2008-2010

Research Assistant

John M. Henderson’s Visual Cognition Lab, University of Edinburgh

Investigating dynamic scene perception through computational models of eye movements, low-level static and temporal visual features, film composition, and object and scene semantics. Wrote custom code for processing a large corpus of audiovisual data, correlating the data with behavioral measures from a large collection of human subject eye movements, and applied pattern recognition and signal processing techniques to infer the contribution of auditory and visual features, and their interaction, within different tasks and film editing styles. The DIEM Project. Funded by the Leverhulme Trust and ESRC. Principal Investigator: John M. Henderson

Education

-

2014

Ph.D. Arts and Computational Technologies

Goldsmiths, University of London

Thesis: Computational Audiovisual Scene Synthesis

This thesis attempts to open a dialogue around fundamental questions of perception such as: how do we represent our ongoing auditory or visual perception of the world using our brain; what could these representations explain and not explain; and how can these representations eventually be modeled by computers?

-

2008

M.Sc. Artificial Intelligence: Intelligent Robotics

University of Edinburgh

-

2007

B.Sc. Computer and Information Sciences

University of Delaware

Publications

-

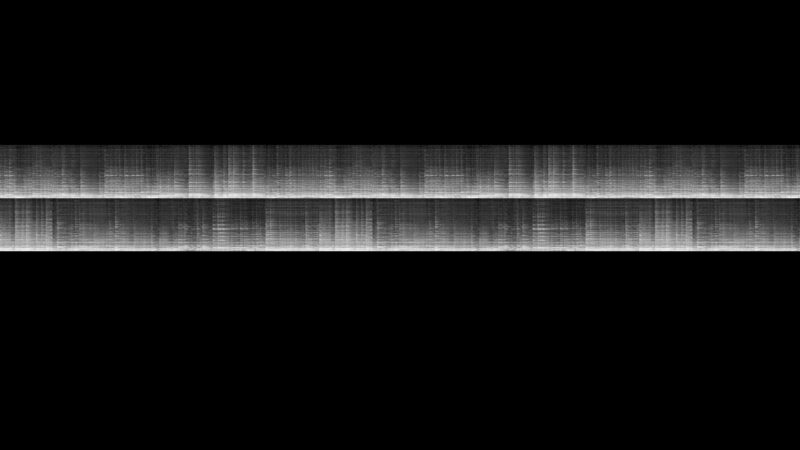

Parag K. Mital. Time Domain Neural Audio Style Transfer. Neural Information Processing Systems Conference 2017 (NIPS 2017), https://arxiv.org/abs/1711.11160, December 3–9, 2017.

-

Christian Frisson, Nicolas Riche, Antoine Coutrot, Charles-Alexandre Delestage, Stéphane Dupont, Onur Ferhat, Nathalie Guyader, Sidi Ahmed Mahmoudi, Matei Mancas, Parag K Mital, Alicia Prieto Echániz, François Rocca, Alexis Rochette, Willy Yvart. Auracle: how are salient cues situated in audiovisual content? eNTERFACE 2014, Bilbao, Spain, June 9 – July 4, 2014.

-

Parag K. Mital, Jessica Thompson, Michael Casey. How Humans Hear and Imagine Musical Scales: Decoding Absolute and Relative Pitch with fMRI. CCN 2014, Dartmouth College, Hanover, NH, USA, August 25-26, 2014.

-

Parag Kumar Mital. Audiovisual Resynthesis in an Augmented Reality. In Proceedings of the ACM International Conference on Multimedia (MM ’14). ACM, New York, NY, USA, 695-698. 2014. DOI=10.1145/2647868.2655617 http://doi.acm.org/10.1145/2647868.2655617

-

Tim J. Smith, Sam Wass, Tessa Dekker, Parag K. Mital, Irati Rodriguez, Annette Karmiloff-Smith. Optimising signal-to-noise ratios in Tots TV can create adult-like viewing behaviour in infants. 2014 International Conference on Infant Studies, Berlin, Germany, July 3-5, 2014.

-

Parag K. Mital, Mick Grierson, and Tim J. Smith. 2013. Corpus-Based Visual Synthesis: An Approach for Artistic Stylization. In Proceedings of the 2013 ACM Symposium on Applied Perception (SAP ’13). ACM, New York, NY, USA, 51-58. DOI=10.1145/2492494.2492505

-

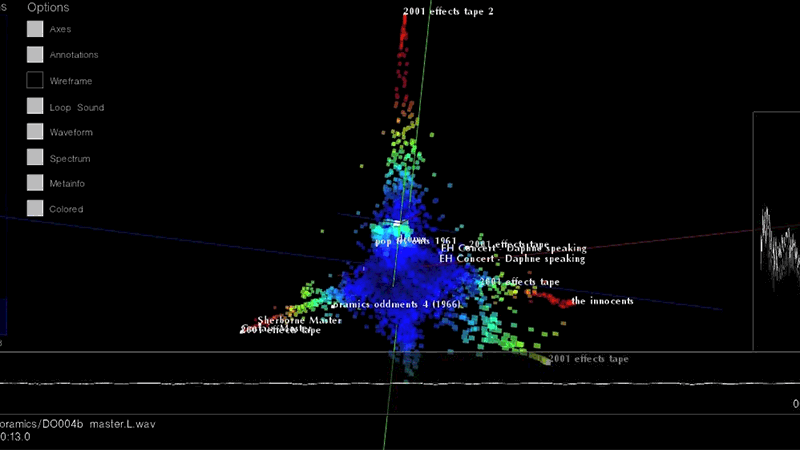

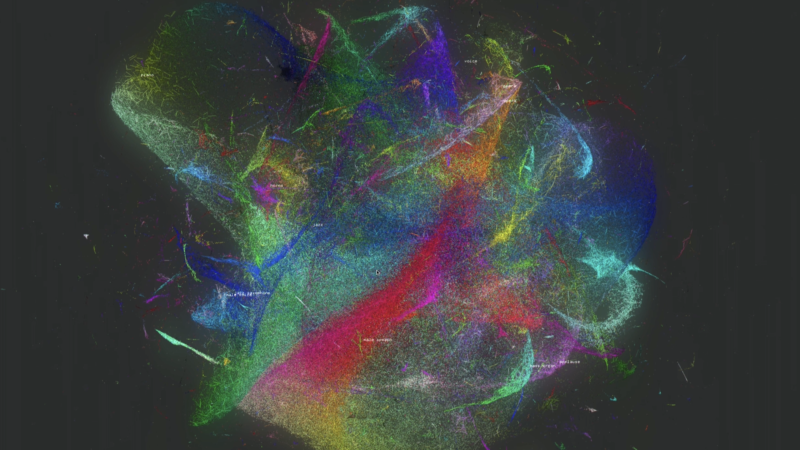

Parag K. Mital, Mick Grierson. Mining Unlabeled Electronic Music Databases through 3D Interactive Visualization of Latent Component Relationships. In Proceedings of the 2013 New Interfaces for Musical Expression Conference, p. 77. South Korea, May 27-30, 2013.

-

Parag K. Mital, Tim J. Smith, Steven Luke, John M. Henderson. Do low-level visual features have a causal influence on gaze during dynamic scene viewing? Journal of Vision, vol. 13 no. 9 article 144, July 24, 2013.

-

Tim J. Smith, Parag K. Mital. Attentional synchrony and the influence of viewing task on gaze behaviour in static and dynamic scenes. Journal of Vision, vol. 13 no. 8 article 16, July 17, 2013.

Tim J. Smith, Parag K. Mital. “Watching the world go by: Attentional prioritization of social motion during dynamic scene viewing”. Journal of Vision, vol. 11 no. 11 article 478, September 23, 2011.

-

Melissa L. Vo, Tim J. Smith, Parag K. Mital, John M. Henderson. “Do the Eyes Really Have it? Dynamic Allocation of Attention when Viewing Moving Faces”. Journal of Vision, vol. 12 no. 13 article 3, December 3, 2012.

-

Parag K. Mital, Tim J. Smith, Robin Hill, John M. Henderson. “Clustering of Gaze during Dynamic Scene Viewing is Predicted by Motion”. Cognitive Computation, Volume 3, Issue 1, pp. 5-24, March 2011.

Teaching

-

2023

UCLA | Cultural Automation with Machine Learning

This course explores the past, present, and future of how artists engage with digital media through the remixing and adaptation of increasingly automated algorithms.

-

2020

UCLA | Cultural Appropriation with Machine Learning

UCLA DMA

This course guides students through state-of-the-art generative methods in machine learning (ML), with a special focus on developing a critical understanding of their use in creative practices. We begin by framing our understanding through the critical lens of cultural appropriation. Next, we look at how machine learning methods have enabled artists to create digital media of increasingly uncanny realism, aided by ever larger volumes of cultural data, leading to new aesthetic practices but also to new concerns and difficult questions of authorship, ownership, and ethical use.

-

2017-2018

CalArts | Audiovisual Interaction w/ Machine Learning

CalArts

This course guides students through using openFrameworks to integrate audiovisual interaction and machine learning into their practice, with real-time processing and interaction as a guiding framework.

-

2016-2019

Kadenze Academy | Creative Applications of Deep Learning

Kadenze Academy

This course program covers state-of-the-art deep learning algorithms, taught through their creative application. This unique course gets you up to speed with TensorFlow and interactive computing with Python, and extends the introductory course into state-of-the-art methods such as WaveNet, CycleGAN, Seq2Seq, and more.

-

2013

Goldsmiths, University of London | Workshops in Creative Coding – Mobile and Computer Vision

Lecturer, Department of Computing @ Goldsmiths, University of London.

This is a 10-week Master’s course covering Mobile and Computer Vision development using the openFrameworks creative coding toolkit at Goldsmiths, Department of Computing. Taught to MSc Computer Science, MSc Cognitive Computing, MSc Games and Entertainment, MA Computational Arts, and MFA Computational Studio Arts students.

-

2012

V&A Museum | Audiovisual Processing for iOS Devices

Lecturer, Digital Studio, Sackler Centre @ Victoria & Albert Museum.

A 10-week course, open to anyone, covering the basics of iOS development.

-

2012

Goldsmiths, University of London | Introduction to openFrameworks

Lecturer, Department of Computing @ Goldsmiths, University of London.

This is a 4-week course covering the basics of openFrameworks taught to MA Computational Arts and MFA Computational Studio Arts students.

-

2012

Goldsmiths, University of London | Workshops in Creative Coding: Computer Vision

Lecturer, Department of Computing @ Goldsmiths, University of London.

This is a 5-week course covering Gesture and Interaction Design as well as Computer Vision basics using the openFrameworks creative coding toolkit. Taught to MSc Computer Science, MSc Cognitive Computing, MSc Games and Entertainment, MA Computational Arts, and MFA Computational Studio Arts students.

-

2011

Srishti College | Center for Experimental Media Art: Interim Semester

Lecturer, Center for Experimental Media Arts @ Srishti School of Art, Design, and Technology.

Taught during the interim semester, the course entitled “Stories are Flowing Trees” introduced a group of 9 students to the creative coding platform openFrameworks through practical sessions, critical discourse, and the development of 3 installation artworks that were exhibited in central Bangalore. During the first week, students were taught basic creative coding routines, including blob tracking, projection mapping, and building interaction with generative sonic systems. After the first week, students worked together to develop, fabricate, install, publicize, and exhibit the 3 artworks at the BAR1 artist-residency space in central Bangalore, in an exhibition entitled SURFACE, textures in interactive new media.

-

Various

Supervisor for 3 MSc Students on Augmented Sculpture. School of Arts, Culture, and Environment, University of Edinburgh, 2010

Supervisor for 6 MSc Students on Incorporating Computer Vision in Interactive Installation. School of Arts, Culture, and Environment, University of Edinburgh, 2009

Engineering and Sciences Research Mentor. Seminar. McNair Scholars, University of Delaware, 2007

Instructor. Web Design. McNair Scholars, University of Delaware, 2007

Teaching Assistant. Introduction to Computer Science. University of Delaware, 2006

Exhibitions

- 2025

Epoch Gallery, Los Angeles, CA, USA

- 2024

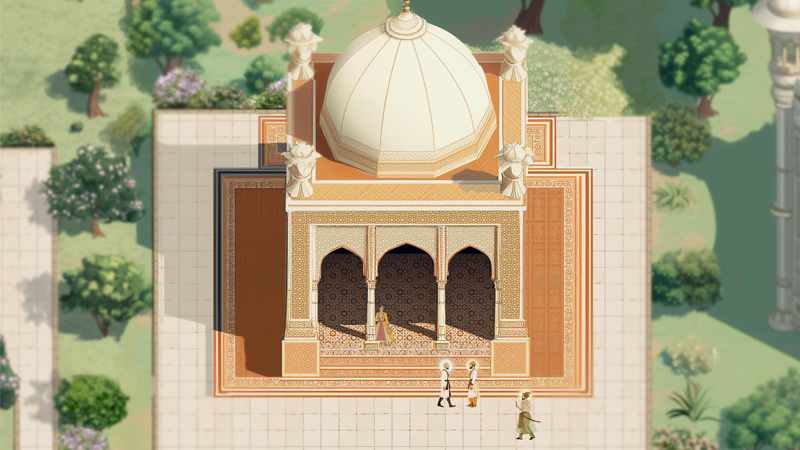

LACMA x Serendipity Arts Festival, Goa, India

Honor Fraser Gallery, Los Angeles, CA, USA

- 2018

Peripheral Visions Film Festival, NYC, USA

- 2016

Espacio Byte, Argentina

- 2015

- Re-Culture 4, International Visual Arts Festival, Patras, Greece

- Cologne Short Film Festival, New Aesthetic, Köln (Cologne), Germany

- Blackout Basel, Basel, Switzerland

- Prix Ars Electronica, Linz, Austria

- Oberhausen Short Film Festival, Oberhausen, Germany

- 2013

-

- 2012

-

- 2011

- SURFACES, Bengaluru Artist Residency 1 (BAR1), Bengaluru (Bangalore), India (Co-Curator and Artist)

- Bitfilm Festival, Goethe Institut, Bengaluru (Bangalore), India

- Oramics to Electronica, Science Museum. London, U.K.

- Edinburgh International Film Festival. Edinburgh, U.K.

- Kinetica Art Fair 2011, Ambika P3. London, U.K.

- 2010-11

Solo Exhibition, Waterman’s Art Centre, London, UK.

- 2010

- onedotzero Adventures in Motion Festival, British Film Institute (BFI) Southbank, London, UK.

- LATES, Science Museum, London, UK.

- Athens Video Art Festival, Technopolis. Athens, Greece

- Is this a test?, Roxy Arthouse, Edinburgh, UK.

- Neverzone, Roxy Arthouse, Edinburgh, UK.

- Dialogues Festival, Voodoo Rooms, Edinburgh, U.K.

- Kinetica Art Fair 2010, Ambika P3. London, U.K.

- Soundings Festival, Reid Concert Hall, Edinburgh, U.K.

- Media Art: A 3-Dimensional Perspective, Online Exhibition (Add-Art)

- 2009

- Passing Through, James Taylor Gallery. London, U.K.

- Interact, Lauriston Castle Glasshouse. Edinburgh, U.K.

- 2008

- Leith Short Film Festival, Edinburgh, U.K. June

- Solo exhibition, Teviot, Edinburgh, U.K. April

Talks/Posters

- Parag K. Mital, “Computational Audiovisual Synthesis and Smashups”. International Festival of Digital Art, Waterman’s Art Centre, 25 August 2012.

- Parag K. Mital and Tim J. Smith, “Investigating Auditory Influences on Eye-movements during Figgis’s Timecode”. 2012 Society for the Cognitive Studies of the Moving Image (SCSMI), New York, NY. 13-16 June 2012.

- Parag K. Mital and Tim J. Smith, “Computational Auditory Scene Analysis of Dynamic Audiovisual Scenes”. Invited Talk, Birkbeck, University of London, Department of Film. London, UK. 25 January 2012.

- Parag K. Mital, “Resynthesizing Perception”. Invited Talk, Queen Mary University of London, London, UK. 11 January 2012.

- Parag K. Mital, “Resynthesizing Perception”. Invited Talk, Dartmouth, Department of Music. Hanover, NH, USA. 7 January 2012.

- Parag K. Mital, “Resynthesizing Perception”. 2011 Bitfilm Festival, Goethe Institut, Bengaluru (Bangalore), India. 3 December 2011.

- Parag K. Mital, “Resynthesizing Perception”. Thursday Club, Goldsmiths, University of London. 13 October 2011.

- Parag K. Mital, “Resynthesizing audiovisual perception with augmented reality”. Invited Talk for Newcastle CULTURE Lab, Lunch Bites. 30 June 2011

- Hill, R.L., Henderson, J. M., Mital, P. K. & Smith, T. J. (2010) “Dynamic Images and Eye Movements”. Poster at ASCUS Art Science Collaborative, Edinburgh College of Art, 29 March 2010.

- Robin Hill, John M. Henderson, Parag K. Mital, Tim J. Smith. “Through the eyes of the viewer: Capturing viewer experience of dynamic media.” Invited Poster for SICSA DEMOFest. Edinburgh, U.K. 24 November 2009

- Parag K Mital, Tim J. Smith, Robin Hill, and John M. Henderson. “Dynamic Images and Eye-Movements.” Invited Talk for Centre for Film, Performance and Media Arts, Close-Up 2. Edinburgh, U.K. 2009

- Parag K. Mital, Stephan Bohacek, Maria Palacas. “Realistic Mobility Models for Urban Evacuations.” 2007 National Ronald E. McNair Conference. 2007

- Parag K. Mital, Stephan Bohacek, Maria Palacas. “Developing Realistic Models for Urban Evacuations.” 2006 National Ronald E. McNair Conference. 2006

Research/Technical Reports

- Parag K. Mital, Tim J. Smith, John M. Henderson. A Framework for Interactive Labeling of Regions of Interest in Dynamic Scenes. MSc Dissertation. Aug 2008

- Parag K. Mital. Interactive Video Segmentation for Dynamic Eye-Tracking Analysis. 2008

- Parag K. Mital. Augmented Reality and Interactive Environments. 2007

- Stephan Bohacek, Parag K. Mital. Mobility Models for Urban Evacuations. 2007

- Parag K. Mital, Jingyi Yu. Light Field Interpolation via Max-Contrast Graph Cuts. 2006

- Parag K. Mital, Jingyi Yu. Gradient Based Domain Video Enhancement of Night Time Video. 2006

- Parag K. Mital, Jingyi Yu. Interactive Light Field Viewer. 2006

- Stephan Bohacek, Parag K. Mital. OpenGL Modeling of Urban Cities and GIS Data Integration. 2005

Associated Labs

- Bregman Media Labs, Dartmouth College

- EAVI: Embodied Audio-Visual Interaction group initiated by Mick Grierson and Marco Gillies at Goldsmiths, University of London

- The DIEM Project: Dynamic Images and Eye-Movements, initiated by John M. Henderson at the University of Edinburgh

- CIRCLE: Creative Interdisciplinary Research in CoLlaborative Environments, initiated between the Edinburgh College of Art, the University of Edinburgh, and elsewhere.

Summer Schools/Workshops Attended

- Michael Zbyszynski, Max/MSP Day School. UC Berkeley CNMAT 2007

- Ali Momeni, Max/MSP Night School. UC Berkeley CNMAT 2007

- Adrian Freed, Sensor Workshop for Performers and Artists. UC Berkeley CNMAT 2007

- Andrew Benson, Jitter Night School. UC Berkeley CNMAT 2007

- Perry R. Cook and Xavier Serra, Digital Signal Processing: Spectral and Physical Models. Stanford CCRMA 2007

- Ivan Laptev, Cordelia Schmid, Josef Sivic, Francis Bach, Alexei Efros, David Forsyth, Zaid Harchaoui, Martial Hebert, Christoph Lampert, Aude Oliva, Jean Ponce, Deva Ramanan, Antonio Torralba, Andrew Zisserman, INRIA Computer Vision and Machine Learning. INRIA Grenoble 2012

- Bob Cox and the NIH AFNI team, AFNI Bootcamp. Haskins Lab, Yale University. May 27-30, 2014.

Volunteering

- ISMAR 2010

- CVPR 2009

- ICMC 2007

- ICMC 2006